mirror of https://github.com/minio/minio.git

cleanup markdown docs across multiple files (#14296)

enable markdown-linter

parent 2c0f121550
commit e3e0532613

@ -0,0 +1,25 @@

name: Markdown Linter

on:
  pull_request:
    branches:
      - master

# This ensures that previous jobs for the PR are canceled when the PR is
# updated.
concurrency:
  group: ${{ github.workflow }}-${{ github.head_ref }}
  cancel-in-progress: true

jobs:
  lint:
    name: Lint all docs
    runs-on: ubuntu-latest
    steps:
      - name: Check out code
        uses: actions/checkout@v2

      - name: Lint all docs
        run: |
          npm install -g markdownlint-cli
          markdownlint --fix '**/*.md' --disable MD013 MD025 MD040 MD024
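
You can run the same check locally before opening a pull request. The sketch below assumes Node.js and `markdownlint-cli` are installed (`npm install -g markdownlint-cli`, as in the workflow). The `lint_docs` function name is hypothetical. Unlike the CI step, it omits `--fix`, so it only reports problems and does not rewrite files.

```sh
# Hypothetical local equivalent of the "Lint all docs" workflow step.
lint_docs() {
  # The glob is quoted so markdownlint-cli expands it (recursively) instead of
  # the shell. The disabled rules match the workflow:
  #   MD013 line length, MD024 duplicate headings,
  #   MD025 multiple top-level headings, MD040 fenced code without a language.
  markdownlint '**/*.md' --disable MD013 MD025 MD040 MD024
}
```

Run `lint_docs` from the repository root. A non-zero exit status means markdownlint found issues.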

@ -1,4 +1,5 @@

# AGPLv3 Compliance

We have designed MinIO as Open Source software for the Open Source software community. This requires applications to consider whether their usage of MinIO is in compliance with the GNU AGPLv3 [license](https://github.com/minio/minio/blob/master/LICENSE).

MinIO cannot make the determination as to whether your application's usage of MinIO is in compliance with the AGPLv3 license requirements. You should instead rely on your own legal counsel or licensing specialists to audit and ensure your application is in compliance with the licenses of MinIO and all other open-source projects with which your application integrates or interacts. We understand that AGPLv3 licensing is complex and nuanced. For that reason, we strongly encourage you to use licensing experts to make any such determinations around compliance instead of relying on apocryphal or anecdotal advice.

@ -7,15 +7,17 @@

Start by forking the MinIO GitHub repository, make changes in a branch, and then send a pull request. We encourage pull requests to discuss code changes. Here are the steps in detail:

### Set up your MinIO GitHub Repository

Fork the [MinIO upstream](https://github.com/minio/minio/fork) source repository to your own personal repository. Copy the URL of your MinIO fork (you will need it for the `git clone` command below).

```sh
git clone https://github.com/<your-github-username>/minio
cd minio
go install -v
ls "$(go env GOPATH)/bin/minio"
```

### Set up git remote as `upstream`

```sh
git remote add upstream https://github.com/minio/minio

@ -25,13 +27,15 @@ $ git merge upstream/master

```

### Create your feature branch

Before making code changes, make sure you create a separate branch for these changes:

```sh
git checkout -b my-new-feature
```

### Test MinIO server changes

After making your code changes, make sure to:

- Add test cases for the new code. If you have questions about how to do it, please ask on our [Slack](https://slack.min.io) channel.

@ -40,29 +44,38 @@ After your code changes, make sure

- Run `make test` and `make build`, and check that both complete successfully.

### Commit changes

After verification, commit your changes. This is a [great post](https://chris.beams.io/posts/git-commit/) on how to write useful commit messages. Note that `git commit -a` only stages files Git already tracks, so run `git add` on any new files first.

```sh
git commit -am 'Add some feature'
```

### Push to the branch

Push your locally committed changes to the remote origin (your fork):

```sh
git push origin my-new-feature
```

### Create a Pull Request

Pull requests can be created via GitHub. Refer to [this document](https://help.github.com/articles/creating-a-pull-request/) for detailed steps on how to create a pull request. After a Pull Request gets peer reviewed and approved, it will be merged.

## FAQs

### How does MinIO manage dependencies?

MinIO uses `go mod` to manage its dependencies.

- Run `go get foo/bar` in the source folder to add the dependency to the `go.mod` file.

To remove a dependency:

- Edit your code and remove the import reference.
- Run `go mod tidy` in the source folder to remove the dependency from the `go.mod` file.

### What are the coding guidelines for MinIO?

MinIO is fully conformant with Golang style. Refer to the [Go Code Review Comments](https://github.com/golang/go/wiki/CodeReviewComments) page from the Go project. If you observe offending code, please feel free to send a pull request or ping us on [Slack](https://slack.min.io).

README.md

@ -1,4 +1,5 @@

# MinIO Quickstart Guide

[Slack](https://slack.min.io) [Docker Hub](https://hub.docker.com/r/minio/minio/) [License](https://github.com/minio/minio/blob/master/LICENSE)

[MinIO](https://min.io)

@ -26,12 +27,12 @@ podman run -p 9000:9000 -p 9001:9001 \

|

|||

```

|

||||

|

||||

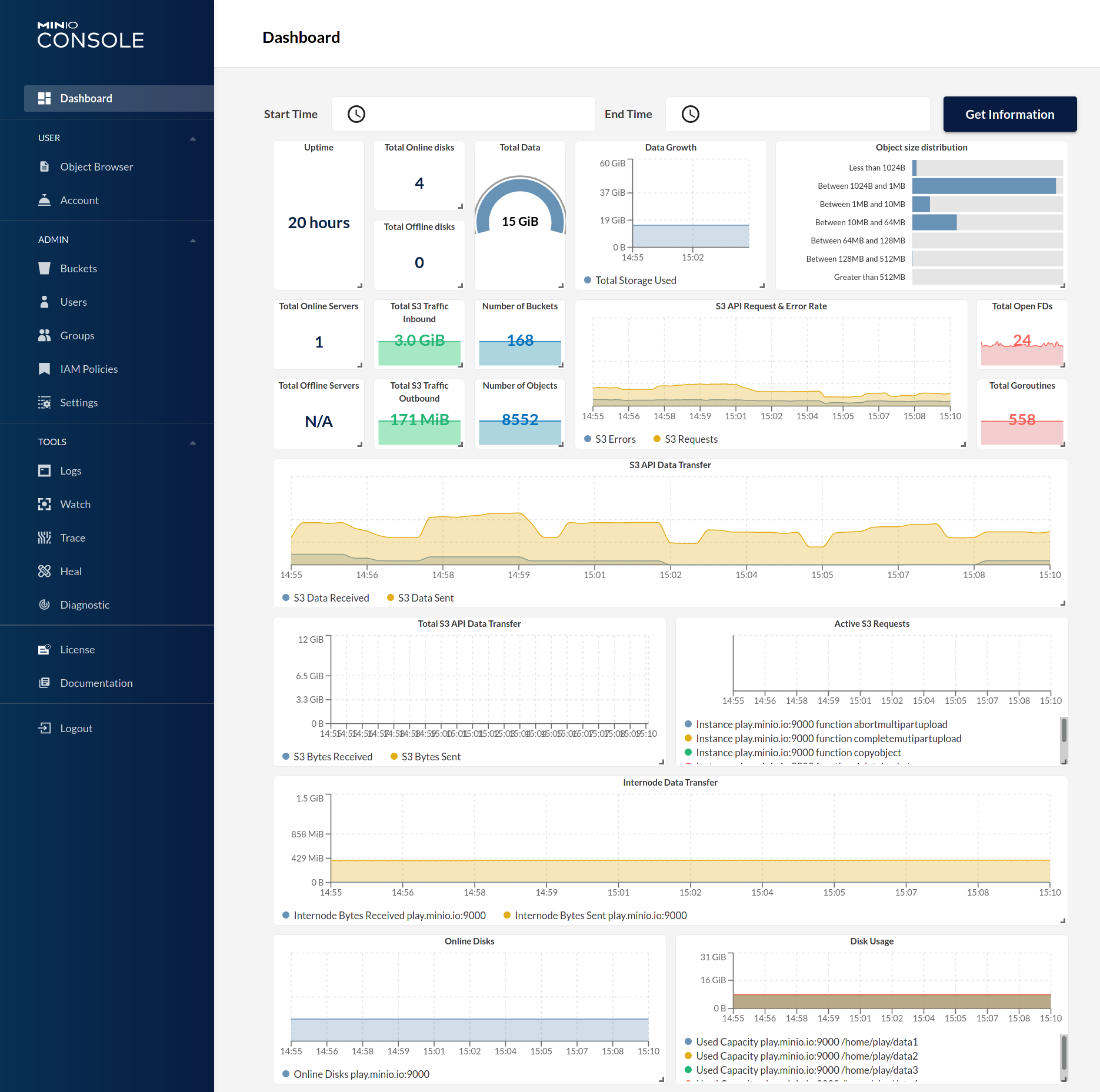

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded

|

||||

object browser built into MinIO Server. Point a web browser running on the host machine to http://127.0.0.1:9000 and log in with the

|

||||

object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the

|

||||

root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

|

||||

|

||||

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See

|

||||

[Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers,

|

||||

see https://docs.min.io/docs/ and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

|

||||

see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

|

||||

|

||||

> NOTE: To deploy MinIO on with persistent storage, you must map local persistent directories from the host OS to the container using the `podman -v` option. For example, `-v /mnt/data:/data` maps the host OS drive at `/mnt/data` to `/data` on the container.

|

||||

|

||||

|

|

@ -57,9 +58,9 @@ brew uninstall minio

brew install minio/stable/minio
```

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See [Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers, see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

## Binary Download

@ -71,9 +72,9 @@ chmod +x minio

./minio server /data
```

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See [Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers, see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

# GNU/Linux

@ -91,14 +92,14 @@ The following table lists supported architectures. Replace the `wget` URL with t

| Architecture | URL |
| -------- | ------ |
| 64-bit Intel/AMD | <https://dl.min.io/server/minio/release/linux-amd64/minio> |
| 64-bit ARM | <https://dl.min.io/server/minio/release/linux-arm64/minio> |
| 64-bit PowerPC LE (ppc64le) | <https://dl.min.io/server/minio/release/linux-ppc64le/minio> |
| IBM Z-Series (S390X) | <https://dl.min.io/server/minio/release/linux-s390x/minio> |
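
Instead of copying the URL by hand, you can derive it from the host's architecture. The sketch below assumes the usual `uname -m` names (`x86_64`, `aarch64`, `ppc64le`, `s390x`). The `minio_download_url` function is a hypothetical helper, not part of MinIO.

```sh
# Hypothetical helper: print the MinIO server download URL for a Linux architecture.
# Defaults to the current host (`uname -m`); the URLs are the ones in the table above.
minio_download_url() {
  local arch="${1:-$(uname -m)}"
  local base="https://dl.min.io/server/minio/release"
  case "$arch" in
    x86_64 | amd64) echo "$base/linux-amd64/minio" ;;
    aarch64 | arm64) echo "$base/linux-arm64/minio" ;;
    ppc64le) echo "$base/linux-ppc64le/minio" ;;
    s390x) echo "$base/linux-s390x/minio" ;;
    *)
      echo "unsupported architecture: $arch" >&2
      return 1
      ;;
  esac
}
```

For example, `wget "$(minio_download_url)"` would fetch the binary that matches the current host. An unrecognized architecture prints an error and returns a non-zero status instead of guessing.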

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See [Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers, see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

> NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require a distributed MinIO deployment with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled, specifically with a *minimum* of 4 drives per MinIO server. See [MinIO Erasure Code Quickstart Guide](https://docs.min.io/docs/minio-erasure-code-quickstart-guide.html) for more complete documentation.

@ -116,9 +117,9 @@ Use the following command to run a standalone MinIO server on the Windows host.

minio.exe server D:\
```

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See [Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers, see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

> NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require a distributed MinIO deployment with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled, specifically with a *minimum* of 4 drives per MinIO server. See [MinIO Erasure Code Quickstart Guide](https://docs.min.io/docs/minio-erasure-code-quickstart-guide.html) for more complete documentation.

@ -130,9 +131,9 @@ Use the following commands to compile and run a standalone MinIO server from sou

GO111MODULE=on go install github.com/minio/minio@latest
```

The MinIO deployment starts using default root credentials `minioadmin:minioadmin`. You can test the deployment using the MinIO Console, an embedded web-based object browser built into MinIO Server. Point a web browser running on the host machine to <http://127.0.0.1:9000> and log in with the root credentials. You can use the Browser to create buckets, upload objects, and browse the contents of the MinIO server.

You can also connect using any S3-compatible tool, such as the MinIO Client `mc` commandline tool. See [Test using MinIO Client `mc`](#test-using-minio-client-mc) for more information on using the `mc` commandline tool. For application developers, see <https://docs.min.io/docs/> and click **MinIO SDKs** in the navigation to view MinIO SDKs for supported languages.

> NOTE: Standalone MinIO servers are best suited for early development and evaluation. Certain features such as versioning, object locking, and bucket replication require a distributed MinIO deployment with Erasure Coding. For extended development and production, deploy MinIO with Erasure Coding enabled, specifically with a *minimum* of 4 drives per MinIO server. See [MinIO Erasure Code Quickstart Guide](https://docs.min.io/docs/minio-erasure-code-quickstart-guide.html) for more complete documentation.

@ -196,6 +197,7 @@ service iptables restart

```

## Pre-existing data

When deployed on a single drive, MinIO server lets clients access any pre-existing data in the data directory. For example, if MinIO is started with the command `minio server /mnt/data`, any pre-existing data in the `/mnt/data` directory would be accessible to the clients.

The above statement is also valid for all gateway backends.

@ -203,11 +205,13 @@ The above statement is also valid for all gateway backends.

# Test MinIO Connectivity

## Test using MinIO Console

MinIO Server comes with an embedded web-based object browser. Point your web browser to <http://127.0.0.1:9000> to ensure your server has started successfully.

> NOTE: MinIO runs the Console on a random port by default. If you wish to choose a specific port, use `--console-address` to pick a specific interface and port.

### Things to consider

MinIO redirects browser access requests to the configured server port (e.g. `127.0.0.1:9000`) to the configured Console port. MinIO uses the hostname or IP address specified in the request when building the redirect URL. The URL and port *must* be accessible by the client for the redirection to work.

For deployments behind a load balancer, proxy, or ingress rule where the MinIO host IP address or port is not public, use the `MINIO_BROWSER_REDIRECT_URL` environment variable to specify the external hostname for the redirect. The LB/Proxy must have rules for directing traffic to the Console port specifically.
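
Here is a sketch of the load-balancer case. It assumes the Console is pinned to port 9001 with `--console-address` and is reachable from outside as `https://console.example.net` (a hypothetical hostname). Under those assumptions, you would set the redirect target before starting the server:

```sh
# Hypothetical external URL under which the load balancer/proxy exposes the Console.
export MINIO_BROWSER_REDIRECT_URL="https://console.example.net"

# The server would then be started with a fixed Console port, for example:
#   minio server /data --console-address ":9001"
```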

@ -218,36 +222,40 @@ Similarly, if your TLS certificates do not have the IP SAN for the MinIO server

|

|||

|

||||

For example: `export MINIO_SERVER_URL="https://minio.example.net"`

|

||||

|

||||

|

||||

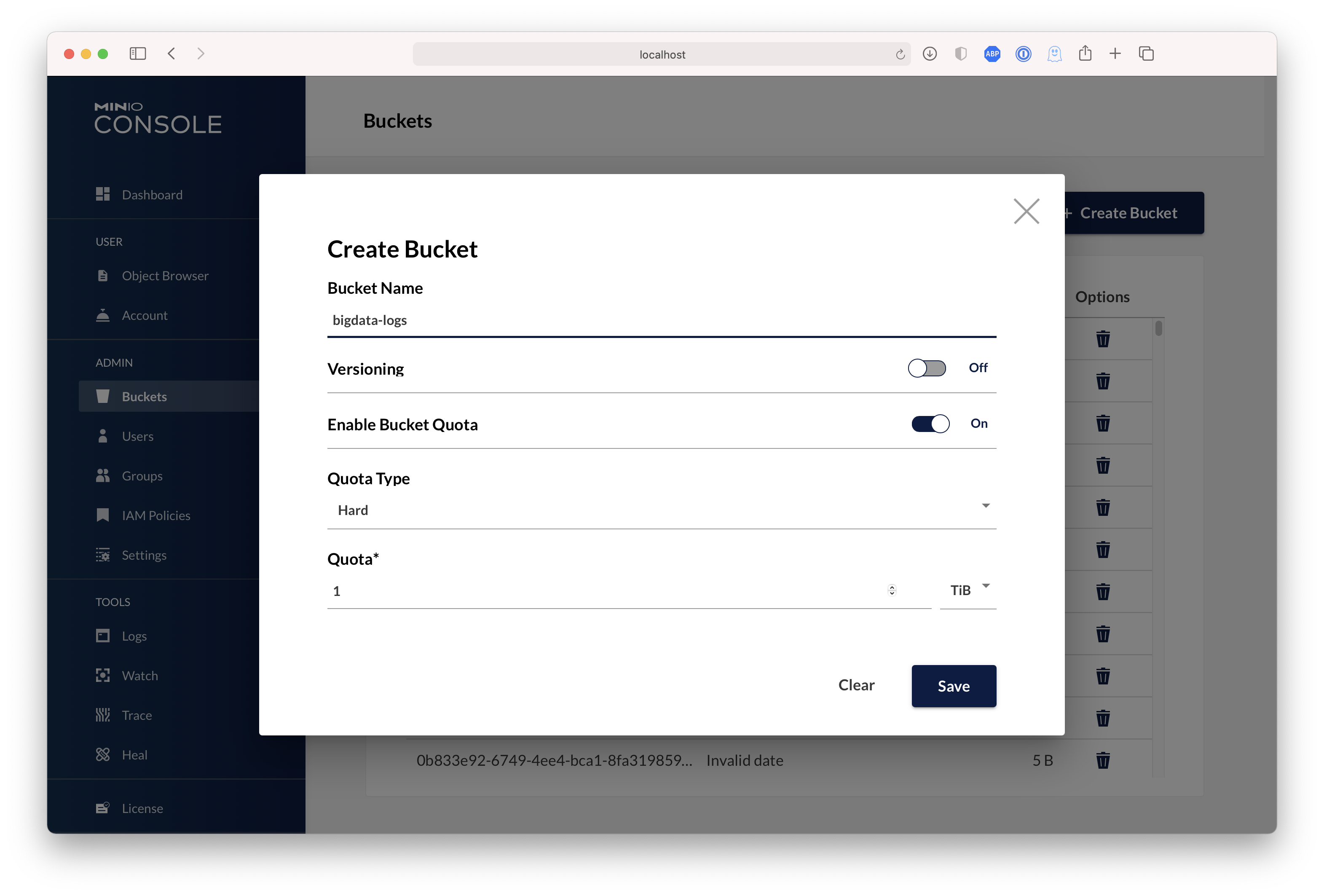

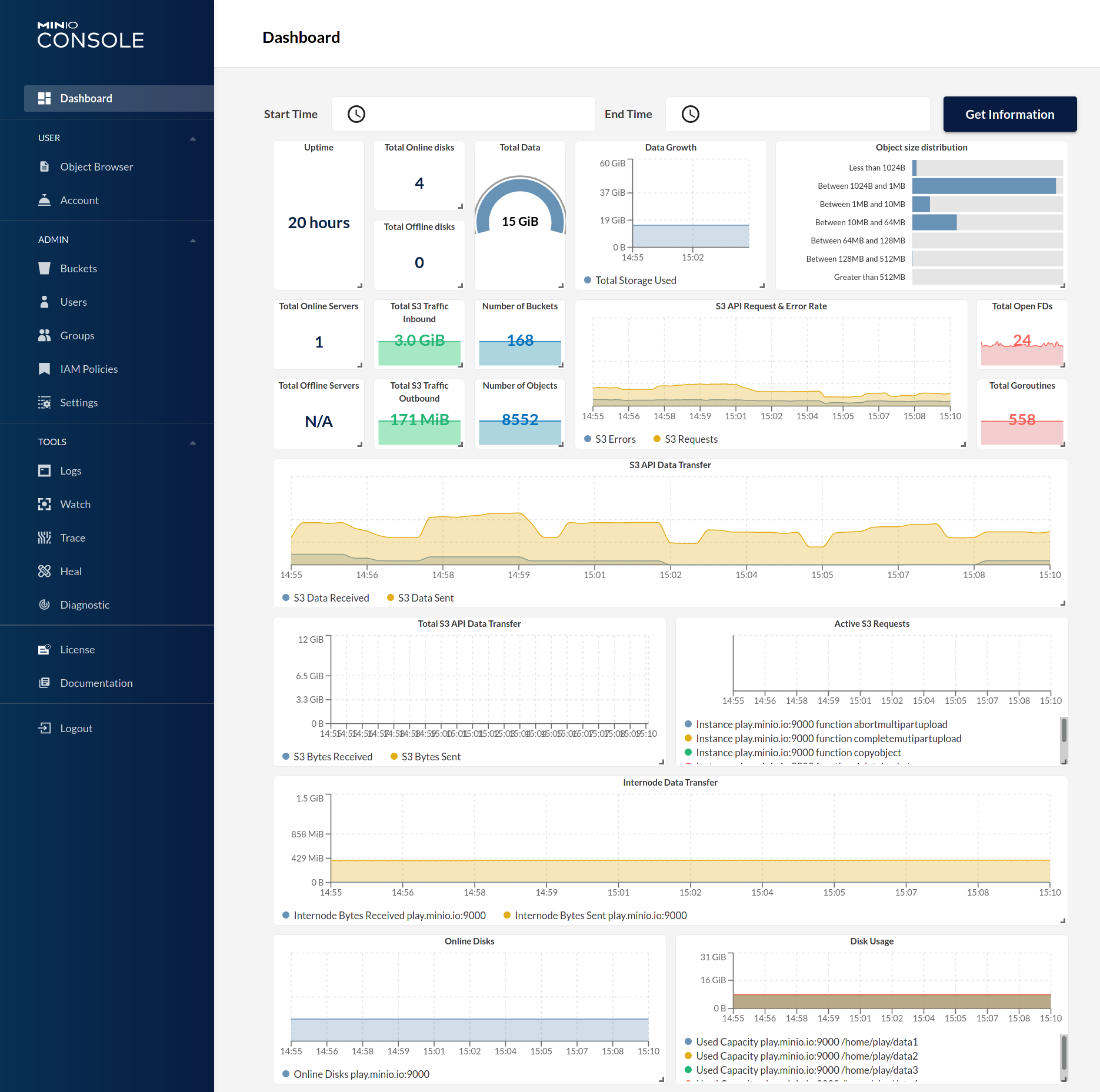

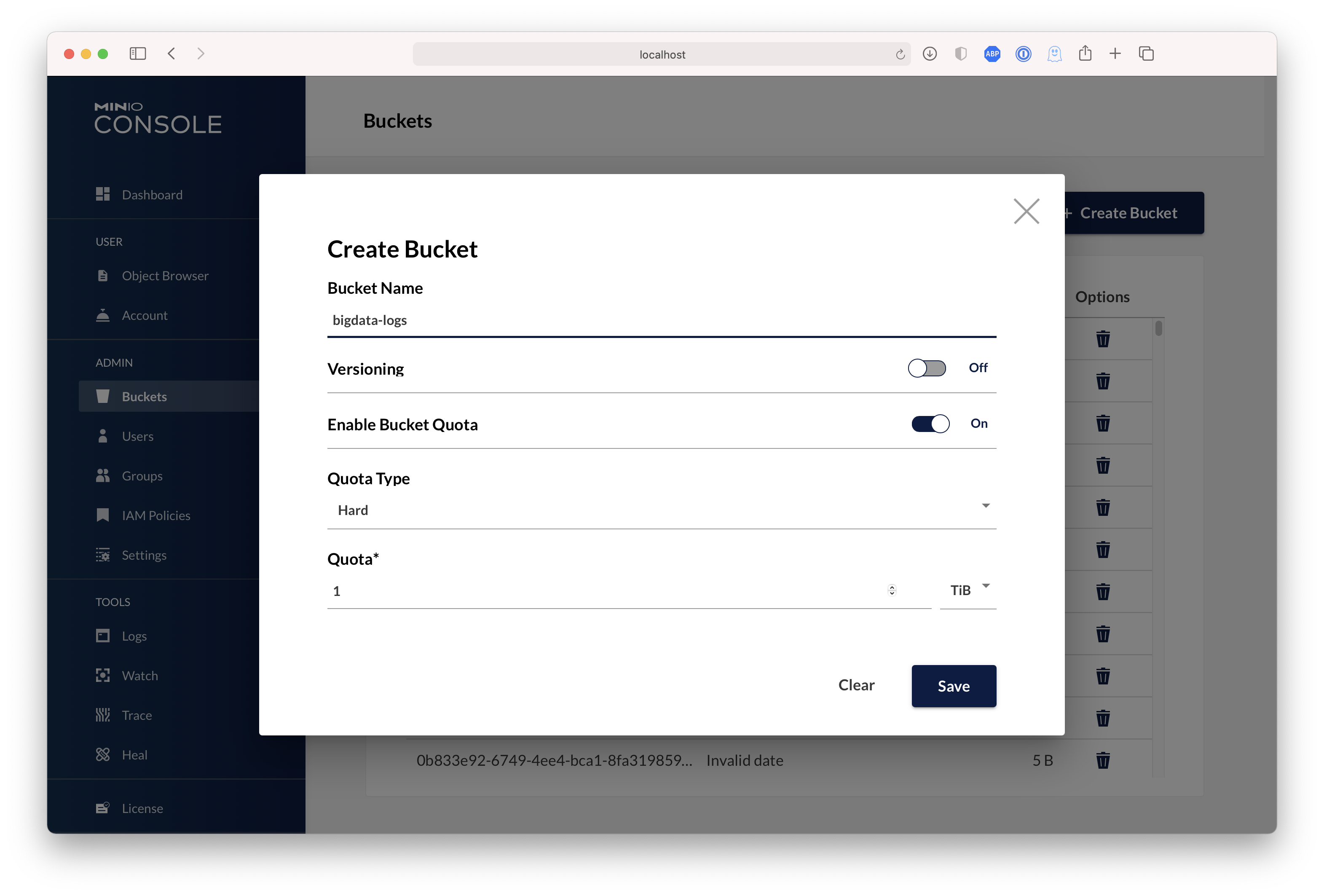

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

||||

## Test using MinIO Client `mc`

|

||||

|

||||

`mc` provides a modern alternative to UNIX commands like ls, cat, cp, mirror, diff etc. It supports filesystems and Amazon S3 compatible cloud storage services. Follow the MinIO Client [Quickstart Guide](https://docs.min.io/docs/minio-client-quickstart-guide) for further instructions.

|

||||

|

||||
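
For a quick connectivity check, the sketch below assumes `mc` is installed and the server listens on `http://127.0.0.1:9000`. It uses the root credentials from `MINIO_ROOT_USER`/`MINIO_ROOT_PASSWORD` and falls back to the default `minioadmin:minioadmin`. The `minio_smoke_test` function, the `local` alias, and the `smoke-test` bucket name are hypothetical.

```sh
# Hypothetical smoke test for a MinIO deployment using the MinIO Client (mc).
minio_smoke_test() {
  local alias_name="${1:-local}"
  local endpoint="${2:-http://127.0.0.1:9000}"

  # Register the deployment under an alias; falls back to the default root credentials.
  mc alias set "$alias_name" "$endpoint" \
    "${MINIO_ROOT_USER:-minioadmin}" "${MINIO_ROOT_PASSWORD:-minioadmin}" || return 1
  # Create a test bucket; --ignore-existing lets reruns succeed.
  mc mb --ignore-existing "$alias_name/smoke-test" || return 1
  # List buckets; smoke-test should appear in the output.
  mc ls "$alias_name"
}
```

Calling `minio_smoke_test` with no arguments targets the local defaults. Passing an alias and endpoint points it at another deployment.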

# Upgrading MinIO

Upgrades require zero downtime in MinIO: all upgrades are non-disruptive and all transactions on MinIO are atomic. Upgrading all the servers simultaneously is therefore the recommended way to upgrade MinIO.

> NOTE: Updating directly requires internet access to <https://dl.min.io>; optionally, you can host mirrors at a location such as <https://my-artifactory.example.com/minio/>

- For deployments that installed the MinIO server binary by hand, use [`mc admin update`](https://docs.min.io/minio/baremetal/reference/minio-mc-admin/mc-admin-update.html):

```sh
mc admin update <minio alias, e.g., myminio>
```

- For deployments without external internet access (e.g. airgapped environments), download the binary from <https://dl.min.io> and replace the existing MinIO binary (for example, `/opt/bin/minio`), apply executable permissions with `chmod +x /opt/bin/minio`, and run `mc admin service restart alias/`.

- For RPM/DEB installations, upgrade packages **in parallel** on all servers. Once upgraded, run `systemctl restart minio` on all nodes in **parallel**. RPM/DEB based installations are usually automated using [`ansible`](https://github.com/minio/ansible-minio).
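
The airgapped procedure above can be sketched as a small shell function. It is an illustration, not an official tool. The `replace_minio_binary` name is hypothetical, the new binary is assumed to already be on the host, and the destination defaults to the `/opt/bin/minio` example path. The function stages the copy next to the old binary and renames it into place. Writing directly into an executable that is currently running can be rejected by Linux with "Text file busy", while a rename within one filesystem swaps the file atomically.

```sh
# Hypothetical helper for airgapped upgrades: install a MinIO binary that has
# already been copied onto this host over the existing one.
replace_minio_binary() {
  local src="$1"
  local dest="${2:-/opt/bin/minio}"
  local tmp="$dest.new"

  # Stage the copy next to the destination so the final mv is a rename
  # within the same filesystem.
  cp "$src" "$tmp" || return 1
  chmod +x "$tmp" || return 1
  # Atomically replace the old binary; a running minio process keeps
  # executing the old file until it is restarted.
  mv -f "$tmp" "$dest"
}
```

After running it on every node, restart all servers together with `mc admin service restart alias/`.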

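
The sketch below shows what "in parallel" means for RPM/DEB restarts. It assumes passwordless SSH and sudo rights on each node. The `restart_minio_everywhere` function and any host names passed to it are hypothetical, and in practice the Ansible role mentioned above would handle this. Every restart starts in the background before the function waits on them, so the nodes restart at roughly the same time rather than one after another.

```sh
# Hypothetical helper: restart MinIO on all nodes at the same time over SSH.
restart_minio_everywhere() {
  local failed=0 pids="" host pid
  for host in "$@"; do
    # Start each restart in the background instead of waiting for it.
    ssh "$host" sudo systemctl restart minio &
    pids="$pids $!"
  done
  # Wait for every background job and record whether any restart failed.
  for pid in $pids; do
    wait "$pid" || failed=1
  done
  return "$failed"
}
```

For example, `restart_minio_everywhere minio1 minio2 minio3 minio4` issues all four restarts at once and returns non-zero if any of them failed.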

## Upgrade Checklist

- Test all upgrades in a lower environment (DEV, QA, UAT) before applying to production. Performing blind upgrades in production environments carries significant risk.
- Read the release notes for the targeted MinIO release *before* performing any installation. There is no requirement to upgrade to the latest release every week: upgrade if the release contains a bug fix you need; otherwise, avoid actively upgrading a running production system.
- Make sure the MinIO process has write access to `/opt/bin` if you plan to use `mc admin update`. MinIO needs this to download the latest binary from <https://dl.min.io> and save it locally for upgrades.
- `mc admin update` is not supported in Kubernetes/container environments; container environments provide their own mechanisms for container updates.
- **We do not recommend upgrading one MinIO server at a time. The product is designed to support parallel upgrades; please follow our recommended guidelines.**

# Explore Further

- [MinIO Erasure Code QuickStart Guide](https://docs.min.io/docs/minio-erasure-code-quickstart-guide)
- [Use `mc` with MinIO Server](https://docs.min.io/docs/minio-client-quickstart-guide)
- [Use `aws-cli` with MinIO Server](https://docs.min.io/docs/aws-cli-with-minio)

@ -256,9 +264,11 @@ mc admin update <minio alias, e.g., myminio>

- [The MinIO documentation website](https://docs.min.io)

# Contribute to MinIO Project

Please follow the MinIO [Contributor's Guide](https://github.com/minio/minio/blob/master/CONTRIBUTING.md).

# License

- MinIO source is licensed under the GNU AGPLv3 license that can be found in the [LICENSE](https://github.com/minio/minio/blob/master/LICENSE) file.
- MinIO [Documentation](https://github.com/minio/minio/tree/master/docs) © 2021 by MinIO, Inc is licensed under [CC BY 4.0](https://creativecommons.org/licenses/by/4.0/).
- [License Compliance](https://github.com/minio/minio/blob/master/COMPLIANCE.md)

@ -18,9 +18,10 @@ you need access credentials for a successful exploit).

If you have not received a reply to your email within 48 hours or you have not heard from the security team
for the past five days, please contact the security team directly:

- Primary security coordinator: aead@min.io
- Secondary coordinator: harsha@min.io
- If you receive no response: dev@min.io

### Disclosure Process

@ -32,7 +33,7 @@ MinIO uses the following disclosure process:

If the report is rejected, the response explains why.
3. Code is audited to find any potential similar problems.
4. Fixes are prepared for the latest release.
5. On the date that the fixes are applied, a security advisory will be published on <https://blog.min.io>.

Please inform us in your report email whether MinIO should mention your contribution with regard to fixing
the security issue. By default, MinIO will **not** publish this information to protect your privacy.

@ -1,11 +1,11 @@

# Vulnerability Management Policy

This document formally describes the process of addressing and managing a
reported vulnerability that has been found in the MinIO server code base,
any directly connected ecosystem component or a direct / indirect dependency
of the code base.

## Scope

The vulnerability management policy described in this document covers the
process of investigating, assessing and resolving a vulnerability report

@ -14,13 +14,13 @@ opened by a MinIO employee or an external third party.

Therefore, it lists pre-conditions and actions that should be performed to
resolve and fix a reported vulnerability.

## Vulnerability Management Process

The vulnerability management process requires that the vulnerability report
contains the following information:

- The project / component that contains the reported vulnerability.
- A description of the vulnerability. In particular, the type of the
  reported vulnerability and how it might be exploited. Alternatively,
  a well-established vulnerability identifier, e.g. CVE number, can be
  used instead.

@ -28,12 +28,11 @@ contains the following information:

Based on the description mentioned above, a MinIO engineer or security team
member investigates:

- Whether the reported vulnerability exists.
- The conditions that are required such that the vulnerability can be exploited.
- The steps required to fix the vulnerability.

In general, if the vulnerability exists in one of the MinIO code bases
itself - not in a code dependency - then MinIO will, if possible, fix
the vulnerability or implement reasonable countermeasures such that the
vulnerability cannot be exploited anymore.

@ -12,12 +12,12 @@ MinIO also supports multi-cluster, multi-site federation similar to AWS regions

## **2. Prerequisites**

- Install Hortonworks Distribution using this [guide](https://docs.hortonworks.com/HDPDocuments/Ambari-2.7.1.0/bk_ambari-installation/content/ch_Installing_Ambari.html).
- [Set up Ambari](https://docs.hortonworks.com/HDPDocuments/Ambari-2.7.1.0/bk_ambari-installation/content/set_up_the_ambari_server.html), which automatically sets up YARN.
- [Installing Spark](https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/installing-spark/content/installing_spark.html)
- Install MinIO Distributed Server using one of the guides below.
  - [Deployment based on Kubernetes](https://docs.min.io/docs/deploy-minio-on-kubernetes.html#minio-distributed-server-deployment)
  - [Deployment based on MinIO Helm Chart](https://github.com/helm/charts/tree/master/stable/minio)

## **3. Configure Hadoop, Spark, Hive to use MinIO**

@ -37,10 +37,10 @@ Navigate to **Custom core-site** to configure MinIO parameters for `_s3a_` conne

```
sudo pip install yq
# xq (installed by the yq package) converts XML files such as core-site.xml to JSON for jq
alias kv-pairify='xq ".configuration[]" | jq ".[]" | jq -r ".name + \"=\" + .value"'
```

For example, take a set of 12 compute nodes with an aggregate memory of _1.2TiB_; the following settings are recommended for optimal results. Add the following optimal entries to _core-site.xml_ to configure _s3a_ with **MinIO**. The most important options here are:

```
cat ${HADOOP_CONF_DIR}/core-site.xml | kv-pairify | grep "mapred"

@ -56,7 +56,7 @@ mapreduce.task.io.sort.factor=999 # Threshold before writing to disk

|

|||

mapreduce.task.sort.spill.percent=0.9 # Minimum % before spilling to disk

|

||||

```

S3A is the connector to use S3 and other S3-compatible object stores such as MinIO. MapReduce workloads typically interact with object stores in the same way they do with HDFS. These workloads rely on HDFS atomic rename functionality to complete writing data to the datastore. Object storage operations are atomic by nature and they do not require/implement rename API. The default S3A committer emulates renames through copy and delete APIs. This interaction pattern causes significant loss of performance because of the write amplification. _Netflix_, for example, developed two new staging committers - the Directory staging committer and the Partitioned staging committer - to take full advantage of native object storage operations. These committers do not require rename operation. The two staging committers were evaluated, along with another new addition called the Magic committer for benchmarking.
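The cost of emulating rename with copy and delete can be seen in a toy in-memory model. This is an illustration only, not S3A or MinIO code; the `objectStore` type, its methods, and the `_temporary` key layout are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// objectStore is a toy stand-in for an S3-style API: it offers put, copy and
// delete but no rename. bytesWritten counts every byte the store writes.
type objectStore struct {
	objects      map[string][]byte
	bytesWritten int
}

func newObjectStore() *objectStore {
	return &objectStore{objects: make(map[string][]byte)}
}

func (s *objectStore) put(key string, data []byte) {
	s.objects[key] = append([]byte(nil), data...)
	s.bytesWritten += len(data)
}

// copyObject rewrites the whole payload under a new key.
func (s *objectStore) copyObject(src, dst string) error {
	data, ok := s.objects[src]
	if !ok {
		return fmt.Errorf("no such key: %s", src)
	}
	s.put(dst, data)
	return nil
}

func (s *objectStore) deleteObject(key string) { delete(s.objects, key) }

// renamePrefix emulates a directory rename, such as promoting task output
// from a temporary directory, with one copy and one delete per object.
func (s *objectStore) renamePrefix(oldPrefix, newPrefix string) error {
	var keys []string
	for k := range s.objects {
		if strings.HasPrefix(k, oldPrefix) {
			keys = append(keys, k)
		}
	}
	for _, k := range keys {
		if err := s.copyObject(k, newPrefix+strings.TrimPrefix(k, oldPrefix)); err != nil {
			return err
		}
		s.deleteObject(k)
	}
	return nil
}

func main() {
	s := newObjectStore()
	s.put("out/_temporary/part-00000", make([]byte, 8<<20)) // 8 MiB of task output
	if err := s.renamePrefix("out/_temporary/", "out/"); err != nil {
		panic(err)
	}
	// Every byte was written twice: once by the task, once by the "rename".
	fmt.Printf("wrote %d MiB to store 8 MiB\n", s.bytesWritten>>20)
}
```

The staging committers avoid this extra copy because, as noted above, they do not rely on a rename operation.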

The directory staging committer was found to be the fastest of the three, so the S3A connector should be configured with the following parameters for optimal results:

@ -95,8 +95,8 @@ fs.s3a.threads.max=2048 # Maximum number of threads for S3A

The remaining optimization options are discussed in the links below:

- [https://hadoop.apache.org/docs/current/hadoop-aws/tools/hadoop-aws/index.html](https://hadoop.apache.org/docs/current/hadoop-aws/tools/hadoop-aws/index.html)
- [https://hadoop.apache.org/docs/r3.1.1/hadoop-aws/tools/hadoop-aws/committers.html](https://hadoop.apache.org/docs/r3.1.1/hadoop-aws/tools/hadoop-aws/committers.html)

Once the config changes are applied, proceed to restart **Hadoop** services.

@ -187,16 +187,16 @@ Test the Spark installation by running the following compute intensive example,

Follow these steps to run the Spark Pi example:

- Log in as user **‘spark’**.
- When the job runs, the library can now use **MinIO** during intermediate processing.
- Navigate to a node with the Spark client and access the spark2-client directory:

```
cd /usr/hdp/current/spark2-client
su spark
```

- Run the Apache Spark Pi job in yarn-client mode, using code from **org.apache.spark**:

```
./bin/spark-submit --class org.apache.spark.examples.SparkPi \

@ -223,9 +223,9 @@ WordCount is a simple program that counts how often a word occurs in a text file

The following example submits WordCount code to the Scala shell. Select an input file for the Spark WordCount example. We can use any text file as input.

- Log in as user **‘spark’**.
- When the job runs, the library can now use **MinIO** during intermediate processing.
- Navigate to a node with the Spark client and access the spark2-client directory:

```
cd /usr/hdp/current/spark2-client

@ -269,7 +269,7 @@ Type :help for more information.

scala>
```

- At the _scala>_ prompt, submit the job by typing the following commands. Replace node names, file name, and file location with your values:

```
scala> val file = sc.textFile("s3a://testbucket/testdata")

@ -6,11 +6,13 @@ Transition tiers can be added to MinIO using `mc admin tier add` command to asso

Lifecycle transition rules can be applied to buckets (both versioned and un-versioned) by specifying the tier name defined above as the transition storage class for the lifecycle rule.

## Implementation

ILM tiering takes place when an object placed in the bucket meets lifecycle transition rules and becomes eligible for tiering. The MinIO scanner (which runs at one-minute intervals, each time scanning one-sixteenth of the namespace) picks up the object for tiering. The data is moved to the remote tier in its entirety, leaving only the object metadata on MinIO.

The data on the backend is stored under the `bucket/prefix` specified in the tier configuration with a custom name derived from a randomly generated uuid - e.g. `0b/c4/0bc4fab7-2daf-4d2f-8e39-5c6c6fb7e2d3`. The first two prefixes are characters 1-2,3-4 from the uuid. This format allows tiering to any cloud irrespective of whether the cloud in question supports versioning. The reference to the transitioned object name and transitioned tier is stored as part of the internal metadata for the object (or its version) on MinIO.
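The naming scheme can be sketched in Go as follows. This reconstructs only the layout described above and is not MinIO's implementation; it assumes a random (version 4) UUID, and `newUUIDv4` and `remoteObjectName` are hypothetical helpers. The result is the object key inside the tier's bucket, below the configured prefix.

```go
package main

import (
	"crypto/rand"
	"fmt"
	"path"
)

// newUUIDv4 returns a random RFC 4122 version 4 UUID string.
func newUUIDv4() (string, error) {
	var b [16]byte
	if _, err := rand.Read(b[:]); err != nil {
		return "", err
	}
	b[6] = (b[6] & 0x0f) | 0x40 // version 4
	b[8] = (b[8] & 0x3f) | 0x80 // RFC 4122 variant
	return fmt.Sprintf("%x-%x-%x-%x-%x", b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

// remoteObjectName builds "<prefix>/<chars 1-2>/<chars 3-4>/<uuid>".
func remoteObjectName(tierPrefix, uuid string) string {
	return path.Join(tierPrefix, uuid[0:2], uuid[2:4], uuid)
}

func main() {
	id, err := newUUIDv4()
	if err != nil {
		panic(err)
	}
	fmt.Println(remoteObjectName("testprefix1/", id))
}
```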

Extra metadata maintained internally in `xl.meta` for a transitioned object:

```
...
"MetaSys": {

@ -21,6 +23,7 @@ Extra metadata maintained internally in `xl.meta` for a transitioned object

```

When a transitioned object is temporarily restored to the local MinIO instance via the PostRestoreObject API, the object data is copied back from the remote tier, and additional metadata for the restored object is maintained as shown below. Once the restore period expires, the scanner removes the local copy of the object during its periodic runs.

```
...
"MetaUsr": {

@ -29,16 +32,24 @@ When a transitioned object is restored temporarily to local MinIO instance via P

"x-amz-restore": "ongoing-request=false, expiry-date=Sat, 27 Feb 2021 00:00:00 GMT",
...
```
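A client inspecting the restore status might decode the `x-amz-restore` value shown above. A minimal sketch, assuming the value always starts with `ongoing-request=<bool>` and optionally continues with `, expiry-date=<HTTP date>`; optional double quotes around each value are tolerated, and `parseRestoreStatus` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"net/http"
	"strconv"
	"strings"
	"time"
)

// restoreStatus is the decoded form of an x-amz-restore value.
type restoreStatus struct {
	Ongoing bool
	Expiry  time.Time // zero unless an expiry date is present
}

func parseRestoreStatus(v string) (restoreStatus, error) {
	const ongoingKey, expiryKey = "ongoing-request=", ", expiry-date="
	var st restoreStatus
	if !strings.HasPrefix(v, ongoingKey) {
		return st, fmt.Errorf("unexpected x-amz-restore value: %q", v)
	}
	// Split on the whole ", expiry-date=" marker: the date itself contains a comma.
	flag, date, hasExpiry := strings.Cut(strings.TrimPrefix(v, ongoingKey), expiryKey)
	ongoing, err := strconv.ParseBool(strings.Trim(flag, `"`))
	if err != nil {
		return st, err
	}
	st.Ongoing = ongoing
	if hasExpiry {
		expiry, err := time.Parse(http.TimeFormat, strings.Trim(date, `"`))
		if err != nil {
			return st, err
		}
		st.Expiry = expiry
	}
	return st, nil
}

func main() {
	st, err := parseRestoreStatus("ongoing-request=false, expiry-date=Sat, 27 Feb 2021 00:00:00 GMT")
	if err != nil {
		panic(err)
	}
	fmt.Println(st.Ongoing, st.Expiry.Format(time.RFC3339)) // false 2021-02-27T00:00:00Z
}
```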

### Encrypted/Object locked objects

For objects under SSE-S3 or SSE-C encryption, the encrypted content from the MinIO cluster is copied as is to the remote tier without any decryption. The content is decrypted as it is streamed from the remote tier on `GET/HEAD`. Objects under retention are protected because the metadata present on the MinIO server ensures that the object (version) is not deleted until the retention period is over. Administrators need to ensure that the remote tier bucket is under proper access control.

### Transition Status

The MinIO-specific extension header `X-Minio-Transition` is displayed on `HEAD/GET` to indicate the expected transition date of the object. Once the object is transitioned to the remote tier, `x-amz-storage-class` shows the name of the tier it was transitioned to. Additional headers such as "X-Amz-Restore-Expiry-Days", "x-amz-restore", and "X-Amz-Restore-Request-Date" are displayed when an object is under restore or has been restored to the local MinIO cluster.

### Expiry or removal events

An object in a transition tier is deleted once it reaches its expiry date or is removed via `mc rm` (`mc rm --vid` to delete a specific object version). Rules specific to legal hold and object locking take precedence over any lifecycle rules.

### Additional notes

Tiering and lifecycle transition are applicable only to erasure/distributed MinIO.

## Explore Further

- [MinIO | Golang Client API Reference](https://docs.min.io/docs/golang-client-api-reference.html#SetBucketLifecycle)
- [Object Lifecycle Management](https://docs.aws.amazon.com/AmazonS3/latest/dev/object-lifecycle-mgmt.html)

@ -3,6 +3,7 @@

Enable object lifecycle configuration on buckets to set up automatic deletion of objects after a specified number of days or on a specified date.

## 1. Prerequisites

- Install MinIO - [MinIO Quickstart Guide](https://docs.min.io/docs/minio-quickstart-guide).
- Install `mc` - [mc Quickstart Guide](https://docs.minio.io/docs/minio-client-quickstart-guide.html)

@ -45,6 +46,7 @@ Lifecycle configuration imported successfully to `play/testbucket`.

```

- List the current settings

```
$ mc ilm ls play/testbucket
ID | Prefix | Enabled | Expiry | Date/Days | Transition | Date/Days | Storage-Class | Tags

@ -64,6 +66,7 @@ This will only work with a versioned bucket, take a look at [Bucket Versioning G

A non-current object version is a version which is not the latest for a given object. It is possible to set up an automatic removal of non-current versions when a version becomes older than a given number of days.

For example, to scan objects stored under the `user-uploads/` prefix and remove versions older than one year:

```
{
    "Rules": [

@ -81,12 +84,12 @@ e.g., To scan objects stored under `user-uploads/` prefix and remove versions ol

}
```

### 3.2 Automatic removal of noncurrent versions keeping only most recent ones after noncurrent days

It is possible to configure automatic removal of older noncurrent versions keeping only the most recent `N` using `NewerNoncurrentVersions`.

For example, to remove the noncurrent versions of all objects under the prefix `user-uploads/` 30 days after they become noncurrent, while keeping the 5 most recent noncurrent versions:

```
{
    "Rules": [

@ -126,6 +129,7 @@ of objects under the prefix `user-uploads/` as soon as there are more than `N` n

    ]
}
```

Note: This rule has an implicit zero NoncurrentDays, which makes the expiry of those 'extra' noncurrent versions immediate.

### 3.3 Automatic removal of delete markers with no other versions

@ -145,6 +149,7 @@ When an object has only one version as a delete marker, the latter can be automa

    ]
}
```

## 4. Enable ILM transition feature

In Erasure mode, MinIO supports tiering to public cloud providers such as GCS, AWS and Azure as well as to other MinIO clusters via the ILM transition feature. This allows older objects to be transitioned to a different cluster or the public cloud by setting up transition rules in the bucket lifecycle configuration. This feature enables applications to optimize storage costs by moving less frequently accessed data to cheaper storage without compromising accessibility of data.

@ -156,14 +161,17 @@ To transition objects in a bucket to a destination bucket on a different cluster

```
mc admin tier add azure source AZURETIER --endpoint https://blob.core.windows.net --access-key AZURE_ACCOUNT_NAME --secret-key AZURE_ACCOUNT_KEY --bucket azurebucket --prefix testprefix1/
```

> The admin user running this command needs the "admin:SetTier" and "admin:ListTier" permissions if not running as root.

Using the above tier, set up a lifecycle rule with a transition:

```
mc ilm add --expiry-days 365 --transition-days 45 --storage-class "AZURETIER" myminio/srcbucket
```

Note: In the case of S3, it is possible to create a tier from MinIO running in EC2 to S3 using the AWS role attached to the EC2 instance as credentials instead of an access key and secret key:

```
mc admin tier add s3 source S3TIER --bucket s3bucket --prefix testprefix/ --use-aws-role
```

@ -177,10 +185,12 @@ aws s3api restore-object --bucket srcbucket \

```

### 4.1 Monitoring transition events

`s3:ObjectTransition:Complete` and `s3:ObjectTransition:Failed` events can be used to monitor transition events between the source cluster and the transition tier. To watch lifecycle events, you can enable bucket notification on the source bucket with `mc event add` and specify the `--event ilm` flag.

Note that transition event notification is a MinIO extension.

## Explore Further

- [MinIO | Golang Client API Reference](https://docs.min.io/docs/golang-client-api-reference.html#SetBucketLifecycle)
- [Object Lifecycle Management](https://docs.aws.amazon.com/AmazonS3/latest/dev/object-lifecycle-mgmt.html)

@ -32,7 +32,6 @@ Various event types supported by MinIO server are

| `s3:BucketCreated` |
| `s3:BucketRemoved` |

Use client tools like `mc` to set and listen for event notifications using the [`event` sub-command](https://docs.min.io/docs/minio-client-complete-guide#events). MinIO SDK's [`BucketNotification` APIs](https://docs.min.io/docs/golang-client-api-reference#SetBucketNotification) can also be used. The notification message MinIO sends to publish an event is a JSON message with the following [structure](https://docs.aws.amazon.com/AmazonS3/latest/dev/notification-content-structure.html).

Bucket events can be published to the following targets:

@ -64,12 +63,11 @@ notify_redis publish bucket notifications to Redis datastores

```

> NOTE:
>
> - '\*' at the end of an arg means it is mandatory.
> - '\*' at the end of a value means it is the default value for the arg.
> - When configured using environment variables, the `:name` can be specified using this format `MINIO_NOTIFY_WEBHOOK_ENABLE_<name>`.

<a name="AMQP"></a>

## Publish MinIO events via AMQP

Install RabbitMQ from [here](https://www.rabbitmq.com/).

@ -135,7 +133,7 @@ Use `mc admin config set` command to update the configuration for the deployment

An example configuration for RabbitMQ is shown below:

```sh
mc admin config set myminio/ notify_amqp:1 exchange="bucketevents" exchange_type="fanout" mandatory="false" no_wait="false" url="amqp://myuser:mypassword@localhost:5672" auto_deleted="false" delivery_mode="0" durable="false" internal="false" routing_key="bucketlogs"
```

MinIO supports all the exchanges available in [RabbitMQ](https://www.rabbitmq.com/). For this setup, we are using the `fanout` exchange.

@ -144,7 +142,7 @@ MinIO also sends with the notifications two headers: `minio-bucket` and `minio-e

Note that you can add as many AMQP server endpoint configurations as needed by providing an identifier (like "1" in the example above) for the AMQP instance and an object of per-server configuration parameters.

### Step 2: Enable RabbitMQ bucket notification using MinIO client

We will enable bucket event notification to trigger whenever a JPEG image is uploaded to or deleted from the `images` bucket on the `myminio` server. Here the ARN value is `arn:minio:sqs::1:amqp`. To learn more about ARNs, see the [AWS ARN](http://docs.aws.amazon.com/general/latest/gr/aws-arns-and-namespaces.html) documentation.

@ -207,8 +205,6 @@ python rabbit.py

'{"Records":[{"eventVersion":"2.0","eventSource":"aws:s3","awsRegion":"","eventTime":"2016-09-08T22:34:38.226Z","eventName":"s3:ObjectCreated:Put","userIdentity":{"principalId":"minio"},"requestParameters":{"sourceIPAddress":"10.1.10.150:44576"},"responseElements":{},"s3":{"s3SchemaVersion":"1.0","configurationId":"Config","bucket":{"name":"images","ownerIdentity":{"principalId":"minio"},"arn":"arn:aws:s3:::images"},"object":{"key":"myphoto.jpg","size":200436,"sequencer":"147279EAF9F40933"}}}],"level":"info","msg":"","time":"2016-09-08T15:34:38-07:00"}'
```

<a name="MQTT"></a>

## Publish MinIO events via MQTT

Install an MQTT Broker from [here](https://mosquitto.org/).

@ -266,14 +262,14 @@ notify_mqtt:1 broker="" password="" queue_dir="" queue_limit="0" reconnect_inter

Use `mc admin config set` command to update the configuration for the deployment. Restart the MinIO server to put the changes into effect. The server will print a line like `SQS ARNs: arn:minio:sqs::1:mqtt` at start-up if there were no errors.

```sh
mc admin config set myminio notify_mqtt:1 broker="tcp://localhost:1883" password="" queue_dir="" queue_limit="0" reconnect_interval="0s" keep_alive_interval="0s" qos="1" topic="minio" username=""
```

MinIO supports any MQTT server that supports MQTT 3.1 or 3.1.1 and can connect to them over TCP, TLS, or a Websocket connection using `tcp://`, `tls://`, or `ws://` respectively as the scheme for the broker url. See the [Go Client](http://www.eclipse.org/paho/clients/golang/) documentation for more information.
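A quick pre-flight check of the `broker` value can catch an unsupported scheme before restarting the server. A sketch using `net/url`; it accepts only the three schemes listed above, and `checkBrokerURL` is a hypothetical helper rather than part of MinIO.

```go
package main

import (
	"fmt"
	"net/url"
)

// checkBrokerURL verifies that an MQTT broker address uses one of the
// schemes listed in this guide and names a host.
func checkBrokerURL(broker string) error {
	u, err := url.Parse(broker)
	if err != nil {
		return err
	}
	switch u.Scheme {
	case "tcp", "tls", "ws":
		// accepted
	default:
		return fmt.Errorf("unsupported MQTT scheme %q in %q", u.Scheme, broker)
	}
	if u.Host == "" {
		return fmt.Errorf("missing host in %q", broker)
	}
	return nil
}

func main() {
	for _, b := range []string{"tcp://localhost:1883", "mqtt://localhost:1883"} {
		fmt.Println(b, "->", checkBrokerURL(b))
	}
}
```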

Note that you can add as many MQTT server endpoint configurations as needed by providing an identifier (like "1" in the example above) for the MQTT instance and an object of per-server configuration parameters.

### Step 2: Enable MQTT bucket notification using MinIO client

We will enable bucket event notification to trigger whenever a JPEG image is uploaded to or deleted from the `images` bucket on the `myminio` server. Here the ARN value is `arn:minio:sqs::1:mqtt`.

@ -332,8 +328,6 @@ python mqtt.py

{"Records":[{"eventVersion":"2.0","eventSource":"aws:s3","awsRegion":"","eventTime":"2016-09-08T22:34:38.226Z","eventName":"s3:ObjectCreated:Put","userIdentity":{"principalId":"minio"},"requestParameters":{"sourceIPAddress":"10.1.10.150:44576"},"responseElements":{},"s3":{"s3SchemaVersion":"1.0","configurationId":"Config","bucket":{"name":"images","ownerIdentity":{"principalId":"minio"},"arn":"arn:aws:s3:::images"},"object":{"key":"myphoto.jpg","size":200436,"sequencer":"147279EAF9F40933"}}}],"level":"info","msg":"","time":"2016-09-08T15:34:38-07:00"}
```

<a name="Elasticsearch"></a>

## Publish MinIO events via Elasticsearch

Install [Elasticsearch](https://www.elastic.co/downloads/elasticsearch) server.

@ -346,7 +340,7 @@ When the _access_ format is used, MinIO appends events as documents in an Elasti

The steps below show how to use this notification target in `namespace` format. The other format is very similar and is omitted for brevity.

### Step 1: Ensure Elasticsearch minimum requirements are met

MinIO requires a 5.x series version of Elasticsearch. This is the latest major release series. Elasticsearch provides version upgrade migration guidelines [here](https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-upgrade.html).

@ -403,12 +397,12 @@ notify_elasticsearch:1 queue_limit="0" url="" format="namespace" index="" queue

Use `mc admin config set` command to update the configuration for the deployment. Restart the MinIO server to put the changes into effect. The server will print a line like `SQS ARNs: arn:minio:sqs::1:elasticsearch` at start-up if there were no errors.

```sh
mc admin config set myminio notify_elasticsearch:1 queue_limit="0" url="http://127.0.0.1:9200" format="namespace" index="minio_events" queue_dir="" username="" password=""
```

Note that you can add as many Elasticsearch server endpoint configurations as needed by providing an identifier (like "1" in the example above) for the Elasticsearch instance and an object of per-server configuration parameters.

### Step 3: Enable Elasticsearch bucket notification using MinIO client

We will now enable bucket event notifications on a bucket named `images`. Whenever a JPEG image is created or overwritten, a new document is added or an existing document is updated in the Elasticsearch index configured above. When an existing object is deleted, the corresponding document is deleted from the index. Thus, the rows in the Elasticsearch index reflect the `.jpg` objects in the `images` bucket.

@ -505,8 +499,6 @@ This output shows that a document has been created for the event in Elasticsearc

Here we see that the document ID is the bucket and object name. In case `access` format was used, the document ID would be automatically generated by Elasticsearch.
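The difference between the two formats comes down to upsert-by-key versus append-only. The toy model below mirrors the behaviour described above using in-memory types; it is not the Elasticsearch or Redis client, the `namespaceIndex` and `accessLog` types are hypothetical, and joining bucket and object with `/` to form the document ID is an assumption.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// event is a minimal notification: an event name plus bucket and object.
type event struct {
	Name, Bucket, Object string
}

// namespaceIndex keeps one document per object, keyed by "bucket/object".
type namespaceIndex map[string]event

func (idx namespaceIndex) apply(e event) {
	id := e.Bucket + "/" + e.Object
	if strings.HasPrefix(e.Name, "s3:ObjectRemoved:") {
		delete(idx, id)
		return
	}
	idx[id] = e // create or overwrite
}

// accessLog appends every event; nothing is ever updated or deleted.
type accessLog []event

func (l *accessLog) apply(e event) { *l = append(*l, e) }

func main() {
	events := []event{
		{"s3:ObjectCreated:Put", "images", "a.jpg"},
		{"s3:ObjectCreated:Put", "images", "b.jpg"},
		{"s3:ObjectCreated:Put", "images", "a.jpg"}, // overwrite
		{"s3:ObjectRemoved:Delete", "images", "b.jpg"},
	}
	idx := namespaceIndex{}
	var history accessLog
	for _, e := range events {
		idx.apply(e)
		history.apply(e)
	}
	ids := make([]string, 0, len(idx))
	for id := range idx {
		ids = append(ids, id)
	}
	sort.Strings(ids)
	fmt.Println("namespace documents:", ids)     // [images/a.jpg]
	fmt.Println("access entries:", len(history)) // 4
}
```

The same distinction applies to the Redis target below: a hash keyed per object in `namespace` format versus an appended list in `access` format.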

<a name="Redis"></a>

## Publish MinIO events via Redis

Install [Redis](http://redis.io/download) server. For illustrative purposes, we have set the database password as "yoursecret".

@ -565,12 +557,12 @@ notify_redis:1 address="" format="namespace" key="" password="" queue_dir="" que

Use `mc admin config set` command to update the configuration for the deployment. Restart the MinIO server to put the changes into effect. The server will print a line like `SQS ARNs: arn:minio:sqs::1:redis` at start-up if there were no errors.

```sh
mc admin config set myminio/ notify_redis:1 address="127.0.0.1:6379" format="namespace" key="bucketevents" password="yoursecret" queue_dir="" queue_limit="0"
```

Note that you can add as many Redis server endpoint configurations as needed by providing an identifier (like "1" in the example above) for the Redis instance and an object of per-server configuration parameters.

### Step 2: Enable Redis bucket notification using MinIO client

We will now enable bucket event notifications on a bucket named `images`. Whenever a JPEG image is created or overwritten, a new key is added or an existing key is updated in the Redis hash configured above. When an existing object is deleted, the corresponding key is deleted from the Redis hash. Thus, the rows in the Redis hash reflect the `.jpg` objects in the `images` bucket.

@ -614,8 +606,6 @@ Here we see that MinIO performed `HSET` on `minio_events` key.

If the `access` format was used, `minio_events` would be a list, and the MinIO server would have performed an `RPUSH` to append to the list. A consumer of this list would ideally use `BLPOP` to remove list items from the left end of the list.

<a name="NATS"></a>

## Publish MinIO events via NATS

Install NATS from [here](http://nats.io/).

@ -650,6 +640,7 @@ comment (sentence) optionally add a comment to this s

```

or environment variables

```
KEY:
notify_nats[:name] publish bucket notifications to NATS endpoints

@ -686,14 +677,14 @@ notify_nats:1 password="yoursecret" streaming_max_pub_acks_in_flight="10" subjec

Use `mc admin config set` command to update the configuration for the deployment. Restart the MinIO server to reflect the config changes. `bucketevents` is the subject used by NATS in this example.

```sh
mc admin config set myminio notify_nats:1 password="yoursecret" streaming_max_pub_acks_in_flight="10" subject="bucketevents" address="0.0.0.0:4222" token="" username="yourusername" ping_interval="0" queue_limit="0" tls="off" streaming_async="on" queue_dir="" streaming_cluster_id="test-cluster" streaming_enable="on"
```

MinIO server also supports [NATS Streaming mode](http://nats.io/documentation/streaming/nats-streaming-intro/) that offers additional functionality like `At-least-once-delivery` and `Publisher rate limiting`. To configure MinIO server to send notifications to a NATS Streaming server, set the `streaming_*` parameters of `notify_nats`, as in the example above.

Read more about the `cluster_id` and `client_id` settings in the [NATS documentation](https://github.com/nats-io/nats-streaming-server/blob/master/README.md). The `maxPubAcksInflight` setting is explained [here](https://github.com/nats-io/stan.go#publisher-rate-limiting).

We will enable bucket event notification to trigger whenever a JPEG image is uploaded or deleted from `images` bucket on `myminio` server. Here ARN value is `arn:minio:sqs::1:nats`. To understand more about ARN please follow [AWS ARN](http://docs.aws.amazon.com/general/latest/gr/aws-arns-and-namespaces.html) documentation.
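Every target example in this guide follows the same ARN pattern, `arn:minio:sqs::<id>:<target>`, where `<id>` is the identifier given in the `notify_<target>:<id>` configuration key. The helper below derives it; it is inferred from those examples rather than taken from a MinIO API, it assumes the empty field is the server region (blank when none is configured), and `targetARN` is hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// targetARN builds the ARN for a notification target, following the
// "arn:minio:sqs:<region>:<id>:<target>" pattern seen in this guide.
func targetARN(region, configKey string) (string, error) {
	// configKey looks like "notify_nats:1".
	sub, id, ok := strings.Cut(configKey, ":")
	if !ok || id == "" || !strings.HasPrefix(sub, "notify_") {
		return "", fmt.Errorf("unexpected config key %q", configKey)
	}
	return fmt.Sprintf("arn:minio:sqs:%s:%s:%s", region, id, strings.TrimPrefix(sub, "notify_")), nil
}

func main() {
	for _, key := range []string{"notify_amqp:1", "notify_mqtt:1", "notify_nats:1"} {
		arn, err := targetARN("", key)
		if err != nil {
			panic(err)
		}
		fmt.Println(arn)
	}
}
```

The resulting string is the ARN to pass to `mc event add` when enabling the bucket notification.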

@ -713,28 +704,28 @@ package main

// Import Go and NATS packages
import (
	"log"
	"runtime"

	"github.com/nats-io/nats.go"
)

func main() {

	// Create server connection
	natsConnection, err := nats.Connect("nats://yourusername:yoursecret@localhost:4222")
	if err != nil {
		log.Fatalf("Failed to connect to NATS: %v", err)
	}
	log.Println("Connected")

	// Subscribe to subject
	log.Printf("Subscribing to subject 'bucketevents'\n")
	natsConnection.Subscribe("bucketevents", func(msg *nats.Msg) {

		// Handle the message
		log.Printf("Received message '%s'\n", string(msg.Data))
	})

	// Keep the connection alive
	runtime.Goexit()
}
```

@ -766,52 +757,52 @@ package main

// Import Go and NATS packages
import (
	"fmt"
	"runtime"

	"github.com/nats-io/stan.go"
)

func main() {

	var stanConnection stan.Conn

	subscribe := func() {
		fmt.Printf("Subscribing to subject 'bucketevents'\n")
		stanConnection.Subscribe("bucketevents", func(m *stan.Msg) {

			// Handle the message
			fmt.Printf("Received a message: %s\n", string(m.Data))
		})
	}

	stanConnection, _ = stan.Connect("test-cluster", "test-client", stan.NatsURL("nats://yourusername:yoursecret@0.0.0.0:4222"), stan.SetConnectionLostHandler(func(c stan.Conn, _ error) {
		go func() {
			for {
				// Reconnect if the connection is lost.
				if stanConnection == nil || stanConnection.NatsConn() == nil || !stanConnection.NatsConn().IsConnected() {
					stanConnection, _ = stan.Connect("test-cluster", "test-client", stan.NatsURL("nats://yourusername:yoursecret@0.0.0.0:4222"), stan.SetConnectionLostHandler(func(c stan.Conn, _ error) {
						if c.NatsConn() != nil {
							c.NatsConn().Close()
						}
						_ = c.Close()
					}))
					if stanConnection != nil {
						subscribe()
					}

				}
			}

		}()
	}))

	// Subscribe to subject
	subscribe()

	// Keep the connection alive
	runtime.Goexit()
}
```

The example `nats.go` program prints event notifications to the console.

```
Received a message: {"EventType":"s3:ObjectCreated:Put","Key":"images/myphoto.jpg","Records":[{"eventVersion":"2.0","eventSource":"minio:s3","awsRegion":"","eventTime":"2017-07-07T18:46:37Z","eventName":"s3:ObjectCreated:Put","userIdentity":{"principalId":"minio"},"requestParameters":{"sourceIPAddress":"192.168.1.80:55328"},"responseElements":{"x-amz-request-id":"14CF20BD1EFD5B93","x-minio-origin-endpoint":"http://127.0.0.1:9000"},"s3":{"s3SchemaVersion":"1.0","configurationId":"Config","bucket":{"name":"images","ownerIdentity":{"principalId":"minio"},"arn":"arn:aws:s3:::images"},"object":{"key":"myphoto.jpg","size":248682,"eTag":"f1671feacb8bbf7b0397c6e9364e8c92","contentType":"image/jpeg","userDefined":{"content-type":"image/jpeg"},"versionId":"1","sequencer":"14CF20BD1EFD5B93"}},"source":{"host":"192.168.1.80","port":"55328","userAgent":"MinIO (linux; amd64) minio-go/2.0.4 mc/DEVELOPMENT.GOGET"}}],"level":"info","msg":"","time":"2017-07-07T11:46:37-07:00"}
```

<a name="PostgreSQL"></a>
