mirror of https://github.com/minio/minio.git

Compare commits: RELEASE.20 ... master (285 Commits)

| Author | SHA1 | Date |
|---|---|---|
| | ba54b39c02 | |
| | 2a75225569 | |
| | e72429c79c | |
| | c5b3f5553f | |
| | d3ae0aaad3 | |
| | d67bccf861 | |
| | 1277ad69a6 | |
| | 8f93e81afb | |
| | 4af31e654b | |
| | aad50579ba | |
| | 38d059b0ae | |
| | bd4eeb4522 | |
| | 03e3493288 | |
| | 64baedf5a4 | |
| | 2f64d5f77e | |
| | f79a4ef4d0 | |
| | 2d53854b19 | |
| | e5c83535af | |
| | c904ef966e | |
| | 8f266e0772 | |
| | e0fe7cc391 | |
| | 9d20dec56a | |
| | 597a785253 | |
| | 7d75b1e758 | |
| | 5f78691fcf | |
| | a591e06ae5 | |
| | 443c93c634 | |
| | 5659cddc84 | |
| | 2a03a34bde | |
| | 1654a9b7e6 | |
| | 673a521711 | |
| | 2e23076688 | |
| | b92ac55250 | |
| | 7981509cc8 | |
| | 6d5bc045bc | |
| | d38e020b29 | |
| | 7d29030292 | |
| | 7c7650b7c3 | |
| | ca80eced24 | |
| | d0e0b81d8e | |
| | 391baa1c9a | |
| | ae14681c3e | |
| | 4d698841f4 | |
| | 9906b3ade9 | |
| | bf1769d3e0 | |
| | 63e1ad9f29 | |
| | 2c7bcee53f | |
| | 1fd90c93ff | |
| | e947a844c9 | |
| | 4e2d39293a | |
| | 1228d6bf1a | |
| | fc4561c64c | |
| | 3b7747b42b | |
| | e432e79324 | |
| | 08d74819b6 | |
| | aa3fde1784 | |
| | 0b3eb7f218 | |
| | 69c9496c71 | |
| | b792b36495 | |
| | d3db7d31a3 | |
| | c05ca63158 | |
| | 6d3e0c7db6 | |
| | 0e59e50b39 | |
| | d4b391de1b | |
| | de4d3dac00 | |
| | 534e7161df | |
| | 9b219cd646 | |
| | 3bab4822f3 | |
| | 3c5f2d8916 | |
| | 5808190398 | |
| | b2a82248b1 | |
| | 4e5fcca8b9 | |
| | c36eaedb93 | |
| | 7752b03add | |
| | 01bfc78535 | |
| | 074d70112d | |
| | e8d14c0d90 | |
| | 60d7e8143a | |
| | 9667a170de | |
| | abae30f9e1 | |
| | f9311bc9d1 | |
| | b598402738 | |
| | bd026b913f | |
| | 72ff69d9bb | |
| | f30417d9a8 | |
| | 47a4ad3cd7 | |
| | 2f7a10ab31 | |
| | b534dc69ab | |
| | 7b7d2ea7d4 | |
| | e00de1c302 | |
| | 3549e583a6 | |
| | f5e3eedf34 | |
| | 519dbfebf6 | |
| | 9a267f9270 | |
| | 67bd71b7a5 | |
| | ec49fff583 | |
| | 8b660e18f2 | |
| | 981497799a | |
| | b9bdc17465 | |
| | b413ff9fdb | |
| | 6a15580817 | |
| | 39633a5581 | |
| | 1e83f15e2f | |
| | 888d2bb1d8 | |
| | 847ee5ac45 | |
| | 9a9a49aa84 | |
| | a03ca80269 | |
| | 523bd769f1 | |
| | 8ff70ea5a9 | |
| | da3e7747ca | |
| | 4afb59e63f | |
| | 1526e7ece3 | |
| | 6c07bfee8a | |
| | 446c760820 | |
| | 04f92f1291 | |
| | 4a60a7794d | |
| | e5b16adb1c | |
| | 402a3ac719 | |
| | f3d61c51fc | |
| | 0cde17ae5d | |
| | 8c1bba681b | |
| | dbfb5e797b | |
| | 08ff702434 | |
| | 0e2148264a | |
| | a75f42344b | |
| | 7926401cbd | |
| | 8161411c5d | |
| | f64dea2aac | |
| | 6579304d8c | |
| | 6bb10a81a6 | |
| | 3cf8a7c888 | |
| | 2e38bb5175 | |
| | a372c6a377 | |
| | 93b2f8a0c5 | |
| | 1a6568a25d | |
| | 9e95703efc | |
| | d8e05aca81 | |
| | 410a1ac040 | |
| | 4caa3422bd | |
| | a658b976f5 | |
| | 135874ebdc | |
| | f4f1c42cba | |
| | e7aa26dc29 | |
| | c54ffde568 | |
| | 9a3c992d7a | |
| | 0c855638de | |
| | 943d815783 | |
| | 4c0acba62d | |
| | 62c3cdee75 | |
| | 3212d0c8cd | |
| | 1d03bea965 | |
| | fbfeb59658 | |
| | 701da1282a | |
| | df93ff92ba | |
| | 77d5331e85 | |
| | 14cdadfb56 | |
| | f3a52cc195 | |
| | 7640cd24c9 | |
| | f7b665347e | |
| | 9693c382a8 | |
| | ee1047bd52 | |
| | 5ea5ab162b | |
| | b5a09ff96b | |
| | 95c65f4e8f | |
| | 6bfff7532e | |
| | 1aa8896ad6 | |
| | 3e32ceb39f | |
| | ca1350b092 | |
| | 9205434ed3 | |
| | cd50e9b4bc | |
| | ec816f3840 | |
| | 5f774951b1 | |
| | 2ca9befd2a | |
| | 72f5cb577e | |
| | 928c0181bf | |
| | 03767d26da | |
| | 108e6f92d4 | |
| | d653a59fc0 | |
| | 01bfdf949a | |
| | 98f7821eb3 | |
| | 2d3898e0d5 | |
| | ae46ce9937 | |
| | dfc112c06b | |
| | ca5fab8656 | |
| | 6df76ca73c | |
| | f65dd3e5a2 | |
| | a8d601b64a | |
| | 73b4794cf7 | |
| | e2709ea129 | |
| | 740ec80819 | |
| | d95e054282 | |
| | 7c1f9667d1 | |
| | 9246990496 | |
| | 0cf3d93360 | |
| | cb06aee5ac | |
| | 1c70e9ed1b | |
| | f3d6a2dd37 | |
| | d1c58fc2eb | |
| | b8f05b1471 | |
| | e7baf78ee8 | |
| | 87299eba10 | |
| | d3a07c29ba | |
| | 8d39b715dc | |
| | 7e3166475d | |
| | 5206c0e883 | |
| | 41ec038523 | |
| | 08d3d06a06 | |
| | 074febd9e1 | |
| | aa8d25797b | |
| | 8d7d4adb91 | |
| | ffa91f9794 | |
| | 0c31e61343 | |
| | 9b926f7dbe | |
| | 35d8728990 | |
| | f7ed9a75ba | |
| | 9496c17e13 | |
| | ed64e91f06 | |
| | a481825ae1 | |
| | 7bb0f32332 | |
| | c6f8dc431e | |
| | 78f177b8ee | |
| | 787c44c39d | |
| | f06fee0364 | |
| | c957e0d426 | |
| | 04101d472f | |
| | 51fc145161 | |
| | 9d63bb1b41 | |
| | 8ff2a7a2b9 | |
| | 91f91d8f47 | |
| | a207bd6790 | |
| | 96d226c0b1 | |
| | a86d98826d | |
| | 1bb670ecba | |
| | c9e9a8e2b9 | |
| | 272367ccd2 | |
| | 95bf4a57b6 | |
| | 2228eb61cb | |
| | 5f07eb2d17 | |
| | d96d696841 | |
| | e18c0ab9bf | |
| | faeb2b7e79 | |
| | 97ce11cb6b | |
| | d7daae4762 | |
| | 3d86ae12bc | |
| | ba46ee5dfa | |
| | 912bbb2f1d | |
| | 4f660a8eb7 | |
| | ae4fb1b72e | |
| | b435806d91 | |
| | 06929258bc | |
| | cb577835d9 | |
| | 7f35f74f14 | |
| | 3d6194e93c | |
| | 72c7845f7e | |
| | 1c99597a06 | |
| | feb9d8480b | |
| | 4e670458b8 | |
| | 48deccdc40 | |
| | 2eee744e34 | |
| | 3f72439b8a | |
| | 468a9fae83 | |
| | d87f91720b | |
| | aa0eec16ab | |
| | d63e603040 | |
| | 8222a640ac | |
| | 7e45d84ace | |
| | 139a606f0a | |
| | 289223b6de | |
| | c61dd16a1e | |
| | 3e38fa54a5 | |
| | 4a02189ba0 | |
| | 3d4fc28ec9 | |
| | ec3a3bb10d | |
| | 364d3a0ac9 | |
| | cb536a73eb | |
| | 428155add9 | |
| | 8bce123bba | |
| | 0a56dbde2f | |
| | 7ff4164d65 | |
| | 53a14c7301 | |
| | dc45a5010d | |
| | 4b9192034c | |
| | deeadd1a37 | |
| | 1fc4203c19 | |
| | 15b930be1f | |

@@ -9,6 +9,6 @@ jobs:
  runs-on: ubuntu-latest
  steps:
  - name: 'Checkout Repository'
- uses: actions/checkout@v3
+ uses: actions/checkout@v4
  - name: 'Dependency Review'
- uses: actions/dependency-review-action@v1
+ uses: actions/dependency-review-action@v4

@@ -3,12 +3,11 @@ name: Crosscompile
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.
- concurrency:
+ concurrency:
  group: ${{ github.workflow }}-${{ github.head_ref }}
  cancel-in-progress: true

@@ -21,11 +20,11 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]
  steps:
- - uses: actions/checkout@ac593985615ec2ede58e132d2e21d2b1cbd6127c # v3
- - uses: actions/setup-go@6edd4406fa81c3da01a34fa6f6343087c207a568 # v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -3,8 +3,7 @@ name: FIPS Build Test
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -21,11 +20,11 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]
  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}

@@ -3,8 +3,7 @@ name: Healing Functional Tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -21,11 +20,11 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]
  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -3,12 +3,11 @@ name: Linters and Tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.
- concurrency:
+ concurrency:
  group: ${{ github.workflow }}-${{ github.head_ref }}
  cancel-in-progress: true

@@ -21,23 +20,24 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
- os: [ubuntu-latest, windows-latest]
+ go-version: [1.22.x]
+ os: [ubuntu-latest, Windows]
  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true
  - name: Build on ${{ matrix.os }}
- if: matrix.os == 'windows-latest'
+ if: matrix.os == 'Windows'
  env:
  CGO_ENABLED: 0
  GO111MODULE: on
  run: |
+ Set-MpPreference -DisableRealtimeMonitoring $true
  netsh int ipv4 set dynamicport tcp start=60000 num=61000
  go build --ldflags="-s -w" -o %GOPATH%\bin\minio.exe
- go test -v --timeout 50m ./...
+ go test -v --timeout 120m ./...
  - name: Build on ${{ matrix.os }}
  if: matrix.os == 'ubuntu-latest'
  env:

@@ -3,8 +3,7 @@ name: Functional Tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -21,11 +20,11 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]
  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -3,8 +3,7 @@ name: Helm Chart linting
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -20,7 +19,7 @@ jobs:
  runs-on: ubuntu-latest
  steps:
  - name: Checkout
- uses: actions/checkout@v3
+ uses: actions/checkout@v4

  - name: Install Helm
  uses: azure/setup-helm@v3

@@ -3,8 +3,7 @@ name: IAM integration
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -62,7 +61,7 @@ jobs:
  # are turned off - i.e. if ldap="", then ldap server is not enabled for
  # the tests.
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  ldap: ["", "localhost:389"]
  etcd: ["", "http://localhost:2379"]
  openid: ["", "http://127.0.0.1:5556/dex"]

@@ -76,8 +75,8 @@ jobs:
  openid: "http://127.0.0.1:5556/dex"

  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -112,6 +111,12 @@ jobs:
  sudo sysctl net.ipv6.conf.default.disable_ipv6=0
  go run docs/iam/access-manager-plugin.go &
  make test-iam
+ - name: Test MinIO Old Version data to IAM import current version
+ if: matrix.ldap == 'ldaphost:389'
+ env:
+ _MINIO_LDAP_TEST_SERVER: ${{ matrix.ldap }}
+ run: |
+ make test-iam-ldap-upgrade-import
  - name: Test LDAP for automatic site replication
  if: matrix.ldap == 'localhost:389'
  run: |

@@ -4,7 +4,6 @@ on:
  pull_request:
  branches:
  - master
- - next

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -25,12 +24,12 @@ jobs:
  sudo -S rm -rf ${GITHUB_WORKSPACE}
  mkdir ${GITHUB_WORKSPACE}
  - name: checkout-step
- uses: actions/checkout@v3
+ uses: actions/checkout@v4

  - name: setup-go-step
- uses: actions/setup-go@v2
+ uses: actions/setup-go@v5
  with:
- go-version: 1.21.x
+ go-version: 1.22.x

  - name: github sha short
  id: vars

@@ -56,6 +55,10 @@ jobs:
  run: |
  ${GITHUB_WORKSPACE}/.github/workflows/run-mint.sh "erasure" "minio" "minio123" "${{ steps.vars.outputs.sha_short }}"

+ - name: resiliency
+ run: |
+ ${GITHUB_WORKSPACE}/.github/workflows/run-mint.sh "resiliency" "minio" "minio123" "${{ steps.vars.outputs.sha_short }}"
+
  - name: The job must cleanup
  if: ${{ always() }}
  run: |

@@ -0,0 +1,78 @@
+ version: '3.7'
+
+ # Settings and configurations that are common for all containers
+ x-minio-common: &minio-common
+ image: quay.io/minio/minio:${JOB_NAME}
+ command: server --console-address ":9001" http://minio{1...4}/rdata{1...2}
+ expose:
+ - "9000"
+ - "9001"
+ environment:
+ MINIO_CI_CD: "on"
+ MINIO_ROOT_USER: "minio"
+ MINIO_ROOT_PASSWORD: "minio123"
+ MINIO_KMS_SECRET_KEY: "my-minio-key:OSMM+vkKUTCvQs9YL/CVMIMt43HFhkUpqJxTmGl6rYw="
+ MINIO_DRIVE_MAX_TIMEOUT: "5s"
+ healthcheck:
+ test: ["CMD", "mc", "ready", "local"]
+ interval: 5s
+ timeout: 5s
+ retries: 5
+
+ # starts 4 docker containers running minio server instances.
+ # using nginx reverse proxy, load balancing, you can access
+ # it through port 9000.
+ services:
+ minio1:
+ <<: *minio-common
+ hostname: minio1
+ volumes:
+ - rdata1-1:/rdata1
+ - rdata1-2:/rdata2
+
+ minio2:
+ <<: *minio-common
+ hostname: minio2
+ volumes:
+ - rdata2-1:/rdata1
+ - rdata2-2:/rdata2
+
+ minio3:
+ <<: *minio-common
+ hostname: minio3
+ volumes:
+ - rdata3-1:/rdata1
+ - rdata3-2:/rdata2
+
+ minio4:
+ <<: *minio-common
+ hostname: minio4
+ volumes:
+ - rdata4-1:/rdata1
+ - rdata4-2:/rdata2
+
+ nginx:
+ image: nginx:1.19.2-alpine
+ hostname: nginx
+ volumes:
+ - ./nginx-4-node.conf:/etc/nginx/nginx.conf:ro
+ ports:
+ - "9000:9000"
+ - "9001:9001"
+ depends_on:
+ - minio1
+ - minio2
+ - minio3
+ - minio4
+
+ ## By default this config uses default local driver,
+ ## For custom volumes replace with volume driver configuration.
+ volumes:
+ rdata1-1:
+ rdata1-2:
+ rdata2-1:
+ rdata2-2:
+ rdata3-1:
+ rdata3-2:
+ rdata4-1:
+ rdata4-2:

@@ -23,10 +23,9 @@ http {
  # include /etc/nginx/conf.d/*.conf;

  upstream minio {
- server minio1:9000;
- server minio2:9000;
- server minio3:9000;
- server minio4:9000;
+ server minio1:9000 max_fails=1 fail_timeout=10s;
+ server minio2:9000 max_fails=1 fail_timeout=10s;
+ server minio3:9000 max_fails=1 fail_timeout=10s;
  }

  upstream console {

@@ -23,14 +23,14 @@ http {
  # include /etc/nginx/conf.d/*.conf;

  upstream minio {
- server minio1:9000;
- server minio2:9000;
- server minio3:9000;
- server minio4:9000;
- server minio5:9000;
- server minio6:9000;
- server minio7:9000;
- server minio8:9000;
+ server minio1:9000 max_fails=1 fail_timeout=10s;
+ server minio2:9000 max_fails=1 fail_timeout=10s;
+ server minio3:9000 max_fails=1 fail_timeout=10s;
+ server minio4:9000 max_fails=1 fail_timeout=10s;
+ server minio5:9000 max_fails=1 fail_timeout=10s;
+ server minio6:9000 max_fails=1 fail_timeout=10s;
+ server minio7:9000 max_fails=1 fail_timeout=10s;
+ server minio8:9000 max_fails=1 fail_timeout=10s;
  }

  upstream console {

@@ -23,10 +23,10 @@ http {
  # include /etc/nginx/conf.d/*.conf;

  upstream minio {
- server minio1:9000;
- server minio2:9000;
- server minio3:9000;
- server minio4:9000;
+ server minio1:9000 max_fails=1 fail_timeout=10s;
+ server minio2:9000 max_fails=1 fail_timeout=10s;
+ server minio3:9000 max_fails=1 fail_timeout=10s;
+ server minio4:9000 max_fails=1 fail_timeout=10s;
  }

  upstream console {

@@ -24,11 +24,6 @@ if [ ! -f ./mc ]; then
  chmod +x mc
  fi

- (
- cd ./docs/debugging/s3-check-md5
- go install -v
- )
-
  export RELEASE=RELEASE.2023-08-29T23-07-35Z

  docker-compose -f docker-compose-site1.yaml up -d

@@ -48,10 +43,10 @@ sleep 30s

  sleep 5

- s3-check-md5 -h
+ ./s3-check-md5 -h

- failed_count_site1=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
- failed_count_site2=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site1=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site2=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)

  if [ $failed_count_site1 -ne 0 ]; then
  echo "failed with multipart on site1 uploads"

@@ -67,8 +62,8 @@ fi

  sleep 5

- failed_count_site1=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
- failed_count_site2=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site1=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site2=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)

  ## we do not need to fail here, since we are going to test
  ## upgrading to master, healing and being able to recover

@@ -96,8 +91,8 @@ for i in $(seq 1 10); do
  ./mc admin heal -r --remove --json site2/ 2>&1 >/dev/null
  done

- failed_count_site1=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
- failed_count_site2=$(s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site1=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site1-nginx:9001 -bucket testbucket 2>&1 | grep FAILED | wc -l)
+ failed_count_site2=$(./s3-check-md5 -versions -access-key minioadmin -secret-key minioadmin -endpoint http://site2-nginx:9002 -bucket testbucket 2>&1 | grep FAILED | wc -l)

  if [ $failed_count_site1 -ne 0 ]; then
  echo "failed with multipart on site1 uploads"

@@ -109,6 +104,43 @@ if [ $failed_count_site2 -ne 0 ]; then
  exit 1
  fi

+ # Add user group test
+ ./mc admin user add site1 site-replication-issue-user site-replication-issue-password
+ ./mc admin group add site1 site-replication-issue-group site-replication-issue-user
+
+ max_wait_attempts=30
+ wait_interval=5
+
+ attempt=1
+ while true; do
+ diff <(./mc admin group info site1 site-replication-issue-group) <(./mc admin group info site2 site-replication-issue-group)
+
+ if [[ $? -eq 0 ]]; then
+ echo "Outputs are consistent."
+ break
+ fi
+
+ remaining_attempts=$((max_wait_attempts - attempt))
+ if ((attempt >= max_wait_attempts)); then
+ echo "Outputs remain inconsistent after $max_wait_attempts attempts. Exiting with error."
+ exit 1
+ else
+ echo "Outputs are inconsistent. Waiting for $wait_interval seconds (attempt $attempt/$max_wait_attempts)."
+ sleep $wait_interval
+ fi
+
+ ((attempt++))
+ done
+
+ status=$(./mc admin group info site1 site-replication-issue-group --json | jq .groupStatus | tr -d '"')
+
+ if [[ $status == "enabled" ]]; then
+ echo "Success"
+ else
+ echo "Expected status: enabled, actual status: $status"
+ exit 1
+ fi
+
  cleanup

  ## change working directory
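The bounded wait loop added in the hunk above (run a check, stop on success, otherwise sleep and give up after `max_wait_attempts`) can be factored into a small reusable helper. The following is a minimal sketch only, written for POSIX `sh`; the function name `retry_until`, its argument order, and the `_ru_` variable prefix are hypothetical and are not part of the MinIO scripts.

```shell
# retry_until MAX_ATTEMPTS INTERVAL_SECONDS COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND until it succeeds or MAX_ATTEMPTS is reached.
# Returns 0 on the first success, 1 once all attempts have failed.
retry_until() {
	_ru_max=$1
	_ru_interval=$2
	shift 2
	_ru_attempt=1
	while true; do
		# Run the check; stop as soon as it succeeds.
		if "$@"; then
			return 0
		fi
		# Give up once the attempt budget is used.
		if [ "$_ru_attempt" -ge "$_ru_max" ]; then
			echo "still failing after $_ru_max attempts" >&2
			return 1
		fi
		echo "attempt $_ru_attempt/$_ru_max failed; retrying in ${_ru_interval}s" >&2
		sleep "$_ru_interval"
		_ru_attempt=$((_ru_attempt + 1))
	done
}

# Example call (not executed here). groups_match is an assumed wrapper
# around the diff of the two `mc admin group info` outputs:
#   retry_until 30 5 groups_match || exit 1
```

Returning a status instead of calling `exit 1` inside the loop leaves the decision to abort with the caller, which makes the same helper usable for checks that are allowed to fail.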

@@ -3,8 +3,7 @@ name: MinIO advanced tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -22,11 +21,11 @@ jobs:

  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]

  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -36,6 +35,18 @@ jobs:
  sudo sysctl net.ipv6.conf.default.disable_ipv6=0
  make test-decom

+ - name: Test ILM
+ run: |
+ sudo sysctl net.ipv6.conf.all.disable_ipv6=0
+ sudo sysctl net.ipv6.conf.default.disable_ipv6=0
+ make test-ilm
+
+ - name: Test PBAC
+ run: |
+ sudo sysctl net.ipv6.conf.all.disable_ipv6=0
+ sudo sysctl net.ipv6.conf.default.disable_ipv6=0
+ make test-pbac
+
  - name: Test Config File
  run: |
  sudo sysctl net.ipv6.conf.all.disable_ipv6=0

@@ -3,8 +3,7 @@ name: Root lockdown tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -21,12 +20,12 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]

  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -16,7 +16,7 @@ docker volume rm $(docker volume ls -f dangling=true) || true
  cd .github/workflows/mint

  docker-compose -f minio-${MODE}.yaml up -d
- sleep 30s
+ sleep 1m

  docker system prune -f || true
  docker volume prune -f || true

@@ -26,6 +26,9 @@ docker volume rm $(docker volume ls -q -f dangling=true) || true
  [ "${MODE}" == "pools" ] && docker-compose -f minio-${MODE}.yaml stop minio2
  [ "${MODE}" == "pools" ] && docker-compose -f minio-${MODE}.yaml stop minio6

+ # Pause one node, to check that all S3 calls work while one node goes wrong
+ [ "${MODE}" == "resiliency" ] && docker-compose -f minio-${MODE}.yaml pause minio4
+
  docker run --rm --net=mint_default \
  --name="mint-${MODE}-${JOB_NAME}" \
  -e SERVER_ENDPOINT="nginx:9000" \

@@ -35,6 +38,18 @@ docker run --rm --net=mint_default \
  -e MINT_MODE="${MINT_MODE}" \
  docker.io/minio/mint:edge

+ # FIXME: enable this after fixing aws-sdk-java-v2 tests
+ # # unpause the node, to check that all S3 calls work while one node goes wrong
+ # [ "${MODE}" == "resiliency" ] && docker-compose -f minio-${MODE}.yaml unpause minio4
+ # [ "${MODE}" == "resiliency" ] && docker run --rm --net=mint_default \
+ # --name="mint-${MODE}-${JOB_NAME}" \
+ # -e SERVER_ENDPOINT="nginx:9000" \
+ # -e ACCESS_KEY="${ACCESS_KEY}" \
+ # -e SECRET_KEY="${SECRET_KEY}" \
+ # -e ENABLE_HTTPS=0 \
+ # -e MINT_MODE="${MINT_MODE}" \
+ # docker.io/minio/mint:edge
+
  docker-compose -f minio-${MODE}.yaml down || true
  sleep 10s
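The script above pauses `minio4` before the mint run and leaves the matching unpause commented out behind a FIXME. One way to keep the two steps paired is a wrapper that pauses a service, runs a command, and always unpauses afterwards. This is only a sketch under stated assumptions: `with_paused_node` and the `COMPOSE_CMD` override are hypothetical names that do not appear in `run-mint.sh`; `pause` and `unpause` are the standard docker-compose subcommands.

```shell
# with_paused_node COMPOSE_FILE SERVICE COMMAND [ARGS...]
# Hypothetical helper: pause SERVICE, run COMMAND, then unpause SERVICE
# whether or not COMMAND succeeded. Returns COMMAND's exit status.
# COMPOSE_CMD (an assumed override) defaults to docker-compose.
with_paused_node() {
	_wpn_file=$1
	_wpn_service=$2
	shift 2
	# If the service cannot be paused, do not run the command at all.
	${COMPOSE_CMD:-docker-compose} -f "$_wpn_file" pause "$_wpn_service" || return 1
	if "$@"; then
		_wpn_status=0
	else
		_wpn_status=$?
	fi
	# Best-effort unpause; a failure here does not mask the command's status.
	${COMPOSE_CMD:-docker-compose} -f "$_wpn_file" unpause "$_wpn_service" || true
	return "$_wpn_status"
}

# Example call (not executed here); run_mint_tests is an assumed wrapper
# around the `docker run ... docker.io/minio/mint:edge` invocation:
#   with_paused_node "minio-${MODE}.yaml" minio4 run_mint_tests
```

Setting `COMPOSE_CMD=echo` prints the compose invocations instead of running them, which gives a quick dry run of the pause and unpause ordering.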

@@ -3,8 +3,7 @@ name: Shell formatting checks
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  permissions:
  contents: read

@@ -14,7 +13,7 @@ jobs:
  name: runner / shfmt
  runs-on: ubuntu-latest
  steps:
- - uses: actions/checkout@v3
+ - uses: actions/checkout@v4
  - uses: luizm/action-sh-checker@master
  env:
  GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

@@ -1,5 +1,5 @@
  ---
- name: Test GitHub Action
+ name: Spelling
  on: [pull_request]

  jobs:

@@ -3,8 +3,7 @@ name: Upgrade old version tests
  on:
  pull_request:
  branches:
- - master
- - next
+ - master

  # This ensures that previous jobs for the PR are canceled when the PR is
  # updated.

@@ -21,12 +20,12 @@ jobs:
  runs-on: ${{ matrix.os }}
  strategy:
  matrix:
- go-version: [1.21.x]
+ go-version: [1.22.x]
  os: [ubuntu-latest]

  steps:
- - uses: actions/checkout@v3
- - uses: actions/setup-go@v3
+ - uses: actions/checkout@v4
+ - uses: actions/setup-go@v5
  with:
  go-version: ${{ matrix.go-version }}
  check-latest: true

@@ -17,15 +17,15 @@ jobs:
  runs-on: ubuntu-latest
  steps:
  - name: Check out code into the Go module directory
- uses: actions/checkout@v3
+ uses: actions/checkout@v4
  - name: Set up Go
- uses: actions/setup-go@v3
+ uses: actions/setup-go@v5
  with:
- go-version: 1.21.8
+ go-version: 1.22.3
  check-latest: true
  - name: Get official govulncheck
  run: go install golang.org/x/vuln/cmd/govulncheck@latest
  shell: bash
  - name: Run govulncheck
- run: govulncheck ./...
+ run: govulncheck -show verbose ./...
  shell: bash

@@ -43,4 +43,13 @@ docs/debugging/inspect/inspect
  docs/debugging/pprofgoparser/pprofgoparser
  docs/debugging/reorder-disks/reorder-disks
  docs/debugging/populate-hard-links/populate-hardlinks
- docs/debugging/xattr/xattr
+ docs/debugging/xattr/xattr
+ hash-set
+ healing-bin
+ inspect
+ pprofgoparser
+ reorder-disks
+ s3-check-md5
+ s3-verify
+ xattr
+ xl-meta

.typos.toml (17 changed lines)

@@ -12,20 +12,27 @@ extend-ignore-re = [
  "[0-9A-Za-z/+=]{64}",
  "ZXJuZXQxDjAMBgNVBA-some-junk-Q4wDAYDVQQLEwVNaW5pbzEOMAwGA1UEAxMF",
  "eyJmb28iOiJiYXIifQ",
+ "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiJ9.*",
+ "MIIDBTCCAe2gAwIBAgIQWHw7h.*",
  'http\.Header\{"X-Amz-Server-Side-Encryptio":',
- 'sessionToken',
+ "ZoEoZdLlzVbOlT9rbhD7ZN7TLyiYXSAlB79uGEge",
  ]

  [default.extend-words]
  "encrypter" = "encrypter"
+ "kms" = "kms"
  "requestor" = "requestor"

  [default.extend-identifiers]
- "bui" = "bui"
- "toi" = "toi"
- "ot" = "ot"
- "dm2nd" = "dm2nd"
  "HashiCorp" = "HashiCorp"

  [type.go.extend-identifiers]
+ "bui" = "bui"
+ "dm2nd" = "dm2nd"
+ "ot" = "ot"
+ "ParseND" = "ParseND"
+ "ParseNDStream" = "ParseNDStream"
+ "pn" = "pn"
  "TestGetPartialObjectMisAligned" = "TestGetPartialObjectMisAligned"
+ "thr" = "thr"
+ "toi" = "toi"

@@ -21,6 +21,11 @@ RUN curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc -o /go
  curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc.minisig -o /go/bin/mc.minisig && \
  chmod +x /go/bin/mc

+ RUN if [ "$TARGETARCH" = "amd64" ]; then \
+ curl -L -s -q https://github.com/moparisthebest/static-curl/releases/latest/download/curl-${TARGETARCH} -o /go/bin/curl; \
+ chmod +x /go/bin/curl; \
+ fi
+
  # Verify binary signature using public key "RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGavRUN"
  RUN minisign -Vqm /go/bin/minio -x /go/bin/minio.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav && \
  minisign -Vqm /go/bin/mc -x /go/bin/mc.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav

@ -49,6 +54,7 @@ ENV MINIO_ACCESS_KEY_FILE=access_key \

|

|||

COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/

|

||||

COPY --from=build /go/bin/minio /usr/bin/minio

|

||||

COPY --from=build /go/bin/mc /usr/bin/mc

|

||||

COPY --from=build /go/bin/cur* /usr/bin/

|

||||

|

||||

COPY CREDITS /licenses/CREDITS

|

||||

COPY LICENSE /licenses/LICENSE

|

||||

|

|

|

|||

|

|

@ -21,6 +21,11 @@ RUN curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc -o /go

|

|||

curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc.minisig -o /go/bin/mc.minisig && \

|

||||

chmod +x /go/bin/mc

|

||||

|

||||

RUN if [ "$TARGETARCH" = "amd64" ]; then \

|

||||

curl -L -s -q https://github.com/moparisthebest/static-curl/releases/latest/download/curl-${TARGETARCH} -o /go/bin/curl; \

|

||||

chmod +x /go/bin/curl; \

|

||||

fi

|

||||

|

||||

# Verify binary signature using public key "RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGavRUN"

|

||||

RUN minisign -Vqm /go/bin/minio -x /go/bin/minio.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav && \

|

||||

minisign -Vqm /go/bin/mc -x /go/bin/mc.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav

|

||||

|

|

@ -49,6 +54,7 @@ ENV MINIO_ACCESS_KEY_FILE=access_key \

|

|||

COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/

|

||||

COPY --from=build /go/bin/minio /usr/bin/minio

|

||||

COPY --from=build /go/bin/mc /usr/bin/mc

|

||||

COPY --from=build /go/bin/cur* /usr/bin/

|

||||

|

||||

COPY CREDITS /licenses/CREDITS

|

||||

COPY LICENSE /licenses/LICENSE

|

||||

|

|

|

|||

|

|

@ -16,6 +16,11 @@ RUN curl -s -q https://dl.min.io/server/minio/release/linux-${TARGETARCH}/archiv

|

|||

curl -s -q https://dl.min.io/server/minio/release/linux-${TARGETARCH}/archive/minio.${RELEASE}.fips.minisig -o /go/bin/minio.minisig && \

|

||||

chmod +x /go/bin/minio

|

||||

|

||||

RUN if [ "$TARGETARCH" = "amd64" ]; then \

|

||||

curl -L -s -q https://github.com/moparisthebest/static-curl/releases/latest/download/curl-${TARGETARCH} -o /go/bin/curl; \

|

||||

chmod +x /go/bin/curl; \

|

||||

fi

|

||||

|

||||

# Verify binary signature using public key "RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGavRUN"

|

||||

RUN minisign -Vqm /go/bin/minio -x /go/bin/minio.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav

|

||||

|

||||

|

|

@ -41,6 +46,7 @@ ENV MINIO_ACCESS_KEY_FILE=access_key \

|

|||

|

||||

COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/

|

||||

COPY --from=build /go/bin/minio /usr/bin/minio

|

||||

COPY --from=build /go/bin/cur* /usr/bin/

|

||||

|

||||

COPY CREDITS /licenses/CREDITS

|

||||

COPY LICENSE /licenses/LICENSE

|

||||

|

|

|

|||

|

|

@ -21,6 +21,11 @@ RUN curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc -o /go

|

|||

curl -s -q https://dl.min.io/client/mc/release/linux-${TARGETARCH}/mc.minisig -o /go/bin/mc.minisig && \

|

||||

chmod +x /go/bin/mc

|

||||

|

||||

RUN if [ "$TARGETARCH" = "amd64" ]; then \

|

||||

curl -L -s -q https://github.com/moparisthebest/static-curl/releases/latest/download/curl-${TARGETARCH} -o /go/bin/curl; \

|

||||

chmod +x /go/bin/curl; \

|

||||

fi

|

||||

|

||||

# Verify binary signature using public key "RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGavRUN"

|

||||

RUN minisign -Vqm /go/bin/minio -x /go/bin/minio.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav && \

|

||||

minisign -Vqm /go/bin/mc -x /go/bin/mc.minisig -P RWTx5Zr1tiHQLwG9keckT0c45M3AGeHD6IvimQHpyRywVWGbP1aVSGav

|

||||

|

|

@ -49,6 +54,7 @@ ENV MINIO_ACCESS_KEY_FILE=access_key \

|

|||

COPY --from=build /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/

|

||||

COPY --from=build /go/bin/minio /usr/bin/minio

|

||||

COPY --from=build /go/bin/mc /usr/bin/mc

|

||||

COPY --from=build /go/bin/cur* /usr/bin/

|

||||

|

||||

COPY CREDITS /licenses/CREDITS

|

||||

COPY LICENSE /licenses/LICENSE

|

||||

|

|

|

|||

49

Makefile

|

|

@ -45,7 +45,7 @@ lint-fix: getdeps ## runs golangci-lint suite of linters with automatic fixes

|

|||

@$(GOLANGCI) run --build-tags kqueue --timeout=10m --config ./.golangci.yml --fix

|

||||

|

||||

check: test

|

||||

test: verifiers build build-debugging ## builds minio, runs linters, tests

|

||||

test: verifiers build ## builds minio, runs linters, tests

|

||||

@echo "Running unit tests"

|

||||

@MINIO_API_REQUESTS_MAX=10000 CGO_ENABLED=0 go test -v -tags kqueue ./...

|

||||

|

||||

|

|

@ -53,12 +53,21 @@ test-root-disable: install-race

|

|||

@echo "Running minio root lockdown tests"

|

||||

@env bash $(PWD)/buildscripts/disable-root.sh

|

||||

|

||||

test-ilm: install-race

|

||||

@echo "Running ILM tests"

|

||||

@env bash $(PWD)/docs/bucket/replication/setup_ilm_expiry_replication.sh

|

||||

|

||||

test-pbac: install-race

|

||||

@echo "Running bucket policies tests"

|

||||

@env bash $(PWD)/docs/iam/policies/pbac-tests.sh

|

||||

|

||||

test-decom: install-race

|

||||

@echo "Running minio decom tests"

|

||||

@env bash $(PWD)/docs/distributed/decom.sh

|

||||

@env bash $(PWD)/docs/distributed/decom-encrypted.sh

|

||||

@env bash $(PWD)/docs/distributed/decom-encrypted-sse-s3.sh

|

||||

@env bash $(PWD)/docs/distributed/decom-compressed-sse-s3.sh

|

||||

@env bash $(PWD)/docs/distributed/decom-encrypted-kes.sh

|

||||

|

||||

test-versioning: install-race

|

||||

@echo "Running minio versioning tests"

|

||||

|

|

@ -81,6 +90,10 @@ test-iam: build ## verify IAM (external IDP, etcd backends)

|

|||

@echo "Running tests for IAM (external IDP, etcd backends) with -race"

|

||||

@MINIO_API_REQUESTS_MAX=10000 GORACE=history_size=7 CGO_ENABLED=1 go test -race -tags kqueue -v -run TestIAM* ./cmd

|

||||

|

||||

test-iam-ldap-upgrade-import: build ## verify IAM (external LDAP IDP)

|

||||

@echo "Running upgrade tests for IAM (LDAP backend)"

|

||||

@env bash $(PWD)/buildscripts/minio-iam-ldap-upgrade-import-test.sh

|

||||

|

||||

test-sio-error:

|

||||

@(env bash $(PWD)/docs/bucket/replication/sio-error.sh)

|

||||

|

||||

|

|

@ -107,37 +120,39 @@ test-site-replication-oidc: install-race ## verify automatic site replication

|

|||

test-site-replication-minio: install-race ## verify automatic site replication

|

||||

@echo "Running tests for automatic site replication of IAM (with MinIO IDP)"

|

||||

@(env bash $(PWD)/docs/site-replication/run-multi-site-minio-idp.sh)

|

||||

@echo "Running tests for automatic site replication of SSE-C objects"

|

||||

@(env bash $(PWD)/docs/site-replication/run-ssec-object-replication.sh)

|

||||

@echo "Running tests for automatic site replication of SSE-C objects with SSE-KMS enabled for bucket"

|

||||

@(env bash $(PWD)/docs/site-replication/run-sse-kms-object-replication.sh)

|

||||

@echo "Running tests for automatic site replication of SSE-C objects with compression enabled for site"

|

||||

@(env bash $(PWD)/docs/site-replication/run-ssec-object-replication-with-compression.sh)

|

||||

|

||||

verify: ## verify minio various setups

|

||||

verify: install-race ## verify minio various setups

|

||||

@echo "Verifying build with race"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -race -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@(env bash $(PWD)/buildscripts/verify-build.sh)

|

||||

|

||||

verify-healing: ## verify healing and replacing disks with minio binary

|

||||

verify-healing: install-race ## verify healing and replacing disks with minio binary

|

||||

@echo "Verify healing build with race"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -race -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@(env bash $(PWD)/buildscripts/verify-healing.sh)

|

||||

@(env bash $(PWD)/buildscripts/verify-healing-empty-erasure-set.sh)

|

||||

@(env bash $(PWD)/buildscripts/heal-inconsistent-versions.sh)

|

||||

|

||||

verify-healing-with-root-disks: ## verify healing root disks

|

||||

verify-healing-with-root-disks: install-race ## verify healing root disks

|

||||

@echo "Verify healing with root drives"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -race -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@(env bash $(PWD)/buildscripts/verify-healing-with-root-disks.sh)

|

||||

|

||||

verify-healing-with-rewrite: ## verify healing to rewrite old xl.meta -> new xl.meta

|

||||

verify-healing-with-rewrite: install-race ## verify healing to rewrite old xl.meta -> new xl.meta

|

||||

@echo "Verify healing with rewrite"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -race -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@(env bash $(PWD)/buildscripts/rewrite-old-new.sh)

|

||||

|

||||

verify-healing-inconsistent-versions: ## verify resolving inconsistent versions

|

||||

verify-healing-inconsistent-versions: install-race ## verify resolving inconsistent versions

|

||||

@echo "Verify resolving inconsistent versions build with race"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -race -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@(env bash $(PWD)/buildscripts/resolve-right-versions.sh)

|

||||

|

||||

build-debugging:

|

||||

@(env bash $(PWD)/docs/debugging/build.sh)

|

||||

|

||||

build: checks ## builds minio to $(PWD)

|

||||

build: checks build-debugging ## builds minio to $(PWD)

|

||||

@echo "Building minio binary to './minio'"

|

||||

@CGO_ENABLED=0 go build -tags kqueue -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

|

||||

|

|

@ -147,9 +162,9 @@ hotfix-vars:

|

|||

$(eval VERSION := $(shell git describe --tags --abbrev=0).hotfix.$(shell git rev-parse --short HEAD))

|

||||

|

||||

hotfix: hotfix-vars clean install ## builds minio binary with hotfix tags

|

||||

@wget -q -c https://github.com/minio/pkger/releases/download/v2.2.1/pkger_2.2.1_linux_amd64.deb

|

||||

@wget -q -c https://github.com/minio/pkger/releases/download/v2.2.9/pkger_2.2.9_linux_amd64.deb

|

||||

@wget -q -c https://raw.githubusercontent.com/minio/minio-service/v1.0.1/linux-systemd/distributed/minio.service

|

||||

@sudo apt install ./pkger_2.2.1_linux_amd64.deb --yes

|

||||

@sudo apt install ./pkger_2.2.9_linux_amd64.deb --yes

|

||||

@mkdir -p minio-release/$(GOOS)-$(GOARCH)/archive

|

||||

@cp -af ./minio minio-release/$(GOOS)-$(GOARCH)/minio

|

||||

@cp -af ./minio minio-release/$(GOOS)-$(GOARCH)/minio.$(VERSION)

|

||||

|

|

@ -176,15 +191,15 @@ docker: build ## builds minio docker container

|

|||

@echo "Building minio docker image '$(TAG)'"

|

||||

@docker build -q --no-cache -t $(TAG) . -f Dockerfile

|

||||

|

||||

install-race: checks ## builds minio to $(PWD)

|

||||

install-race: checks build-debugging ## builds minio to $(PWD)

|

||||

@echo "Building minio binary with -race to './minio'"

|

||||

@GORACE=history_size=7 CGO_ENABLED=1 go build -tags kqueue -race -trimpath --ldflags "$(LDFLAGS)" -o $(PWD)/minio 1>/dev/null

|

||||

@echo "Installing minio binary with -race to '$(GOPATH)/bin/minio'"

|

||||

@mkdir -p $(GOPATH)/bin && cp -f $(PWD)/minio $(GOPATH)/bin/minio

|

||||

@mkdir -p $(GOPATH)/bin && cp -af $(PWD)/minio $(GOPATH)/bin/minio

|

||||

|

||||

install: build ## builds minio and installs it to $GOPATH/bin.

|

||||

@echo "Installing minio binary to '$(GOPATH)/bin/minio'"

|

||||

@mkdir -p $(GOPATH)/bin && cp -f $(PWD)/minio $(GOPATH)/bin/minio

|

||||

@mkdir -p $(GOPATH)/bin && cp -af $(PWD)/minio $(GOPATH)/bin/minio

|

||||

@echo "Installation successful. To learn more, try \"minio --help\"."

|

||||

|

||||

clean: ## cleanup all generated assets

|

||||

|

|

|

|||

|

|

@ -210,10 +210,6 @@ For deployments behind a load balancer, proxy, or ingress rule where the MinIO h

|

|||

|

||||

For example, consider a MinIO deployment behind a proxy `https://minio.example.net`, `https://console.minio.example.net` with rules for forwarding traffic on port :9000 and :9001 to MinIO and the MinIO Console respectively on the internal network. Set `MINIO_BROWSER_REDIRECT_URL` to `https://console.minio.example.net` to ensure the browser receives a valid reachable URL.

|

||||

|

||||

Similarly, if your TLS certificates do not have the IP SAN for the MinIO server host, the MinIO Console may fail to validate the connection to the server. Use the `MINIO_SERVER_URL` environment variable and specify the proxy-accessible hostname of the MinIO server to allow the Console to use the MinIO server API using the TLS certificate.

|

||||

|

||||

For example: `export MINIO_SERVER_URL="https://minio.example.net"`

|

||||

|

||||

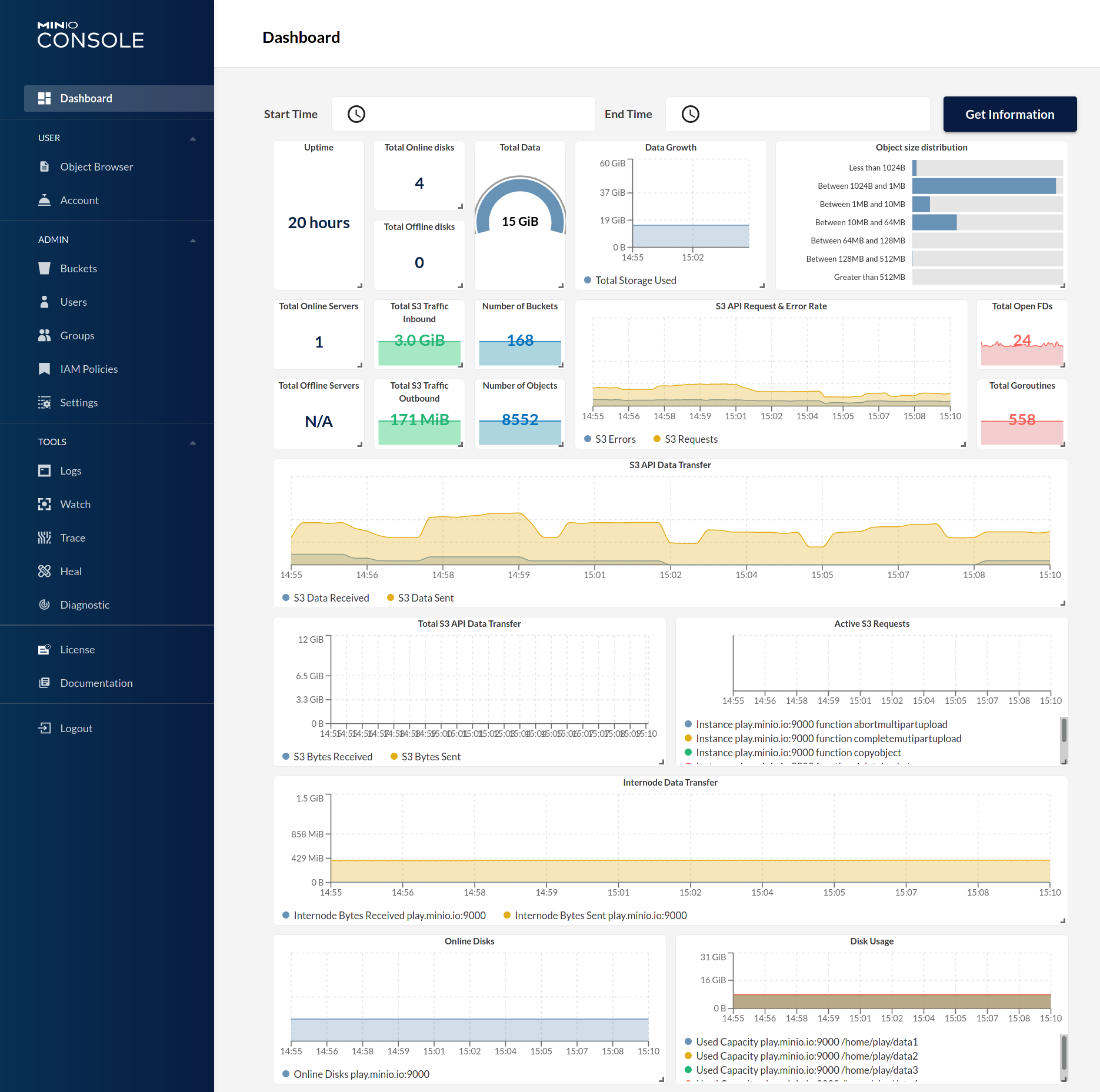

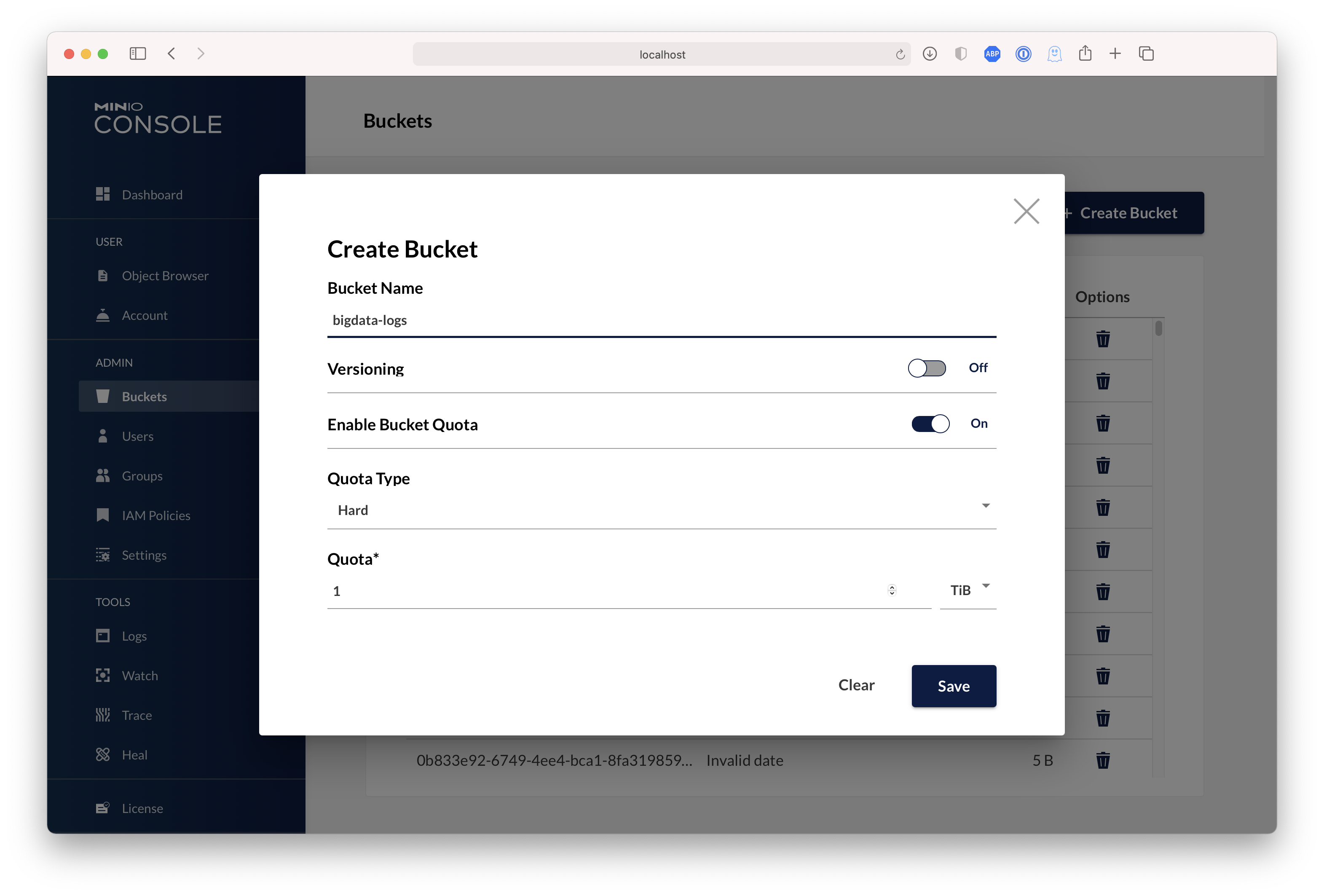

| Dashboard | Creating a bucket |

|

||||

| ------------- | ------------- |

|

||||

|  |  |

|

||||

|

|

|

|||

|

|

@ -32,6 +32,7 @@ fi

|

|||

set +e

|

||||

|

||||

export MC_HOST_minioadm=http://minioadmin:minioadmin@localhost:9100/

|

||||

./mc ready minioadm

|

||||

|

||||

./mc ls minioadm/

|

||||

|

||||

|

|

@ -56,7 +57,7 @@ done

|

|||

|

||||

set +e

|

||||

|

||||

sleep 10

|

||||

./mc ready minioadm/

|

||||

|

||||

./mc ls minioadm/

|

||||

if [ $? -ne 0 ]; then

|

||||

|

|

@ -81,11 +82,12 @@ minio server --address 127.0.0.1:9003 "http://127.0.0.1:9003/tmp/multisiteb/data

|

|||

minio server --address 127.0.0.1:9004 "http://127.0.0.1:9003/tmp/multisiteb/data/disterasure/xl{1...4}" \

|

||||

"http://127.0.0.1:9004/tmp/multisiteb/data/disterasure/xl{5...8}" >/tmp/siteb_2.log 2>&1 &

|

||||

|

||||

sleep 20s

|

||||

|

||||

export MC_HOST_sitea=http://minioadmin:minioadmin@127.0.0.1:9001

|

||||

export MC_HOST_siteb=http://minioadmin:minioadmin@127.0.0.1:9004

|

||||

|

||||

./mc ready sitea

|

||||

./mc ready siteb

|

||||

|

||||

./mc admin replicate add sitea siteb

|

||||

|

||||

./mc admin user add sitea foobar foo12345

|

||||

|

|

@ -109,11 +111,12 @@ minio server --address 127.0.0.1:9003 "http://127.0.0.1:9003/tmp/multisiteb/data

|

|||

minio server --address 127.0.0.1:9004 "http://127.0.0.1:9003/tmp/multisiteb/data/disterasure/xl{1...4}" \

|

||||

"http://127.0.0.1:9004/tmp/multisiteb/data/disterasure/xl{5...8}" >/tmp/siteb_2.log 2>&1 &

|

||||

|

||||

sleep 20s

|

||||

|

||||

export MC_HOST_sitea=http://foobar:foo12345@127.0.0.1:9001

|

||||

export MC_HOST_siteb=http://foobar:foo12345@127.0.0.1:9004

|

||||

|

||||

./mc ready sitea

|

||||

./mc ready siteb

|

||||

|

||||

./mc admin user add sitea foobar-admin foo12345

|

||||

|

||||

sleep 2s

|

||||

|

|

|

|||

|

|

@ -0,0 +1,127 @@

|

|||

#!/bin/bash

|

||||

|

||||

# This script is used to test the migration of IAM content from old minio

|

||||

# instance to new minio instance.

|

||||

#

|

||||

# To run it locally, start the LDAP server in github.com/minio/minio-iam-testing

|

||||

# repo (e.g. make podman-run), and then run this script.

|

||||

#

|

||||

# This script assumes that LDAP server is at:

|

||||

#

|

||||

# `localhost:1389`

|

||||

#

|

||||

# if this is not the case, set the environment variable

|

||||

# `_MINIO_LDAP_TEST_SERVER`.

|

||||

|

||||

OLD_VERSION=RELEASE.2024-03-26T22-10-45Z

|

||||

OLD_BINARY_LINK=https://dl.min.io/server/minio/release/linux-amd64/archive/minio.${OLD_VERSION}

|

||||

|

||||

__init__() {

|

||||

if which curl &>/dev/null; then

|

||||

echo "curl is already installed"

|

||||

else

|

||||

echo "Installing curl:"

|

||||

sudo apt install curl -y

|

||||

fi

|

||||

|

||||

export GOPATH=/tmp/gopath

|

||||

export PATH="${PATH}":"${GOPATH}"/bin

|

||||

|

||||

if which mc &>/dev/null; then

|

||||

echo "mc is already installed"

|

||||

else

|

||||

echo "Installing mc:"

|

||||

go install github.com/minio/mc@latest

|

||||

fi

|

||||

|

||||

if [ ! -x ./minio.${OLD_VERSION} ]; then

|

||||

echo "Downloading minio.${OLD_VERSION} binary"

|

||||

curl -o minio.${OLD_VERSION} ${OLD_BINARY_LINK}

|

||||

chmod +x minio.${OLD_VERSION}

|

||||

fi

|

||||

|

||||

if [ -z "$_MINIO_LDAP_TEST_SERVER" ]; then

|

||||

export _MINIO_LDAP_TEST_SERVER=localhost:1389

|

||||

echo "Using default LDAP endpoint: $_MINIO_LDAP_TEST_SERVER"

|

||||

fi

|

||||

|

||||

rm -rf /tmp/data

|

||||

}

|

||||

|

||||

create_iam_content_in_old_minio() {

|

||||

echo "Creating IAM content in old minio instance."

|

||||

|

||||

MINIO_CI_CD=1 ./minio.${OLD_VERSION} server /tmp/data/{1...4} &

|

||||

sleep 5

|

||||

|

||||

set -x

|

||||

mc alias set old-minio http://localhost:9000 minioadmin minioadmin

|

||||

mc ready old-minio

|

||||

mc idp ldap add old-minio \

|

||||

server_addr=localhost:1389 \

|

||||

server_insecure=on \

|

||||

lookup_bind_dn=cn=admin,dc=min,dc=io \

|

||||

lookup_bind_password=admin \

|

||||

user_dn_search_base_dn=dc=min,dc=io \

|

||||

user_dn_search_filter="(uid=%s)" \

|

||||

group_search_base_dn=ou=swengg,dc=min,dc=io \

|

||||

group_search_filter="(&(objectclass=groupOfNames)(member=%d))"

|

||||

mc admin service restart old-minio

|

||||

|

||||

mc idp ldap policy attach old-minio readwrite --user=UID=dillon,ou=people,ou=swengg,dc=min,dc=io

|

||||

mc idp ldap policy attach old-minio readwrite --group=CN=project.c,ou=groups,ou=swengg,dc=min,dc=io

|

||||

|

||||

mc idp ldap policy entities old-minio

|

||||

|

||||

mc admin cluster iam export old-minio

|

||||

set +x

|

||||

|

||||

mc admin service stop old-minio

|

||||

}

|

||||

|

||||

import_iam_content_in_new_minio() {

|

||||

echo "Importing IAM content in new minio instance."

|

||||

# Assume current minio binary exists.

|

||||

MINIO_CI_CD=1 ./minio server /tmp/data/{1...4} &

|

||||

sleep 5

|

||||

|

||||

set -x

|

||||

mc alias set new-minio http://localhost:9000 minioadmin minioadmin

|

||||

echo "BEFORE IMPORT mappings:"

|

||||

mc ready new-minio

|

||||

mc idp ldap policy entities new-minio

|

||||

mc admin cluster iam import new-minio ./old-minio-iam-info.zip

|

||||

echo "AFTER IMPORT mappings:"

|

||||

mc idp ldap policy entities new-minio

|

||||

set +x

|

||||

|

||||

# mc admin service stop new-minio

|

||||

}

|

||||

|

||||

verify_iam_content_in_new_minio() {

|

||||

output=$(mc idp ldap policy entities new-minio --json)

|

||||

|

||||

groups=$(echo "$output" | jq -r '.result.policyMappings[] | select(.policy == "readwrite") | .groups[]')

|

||||

if [ "$groups" != "cn=project.c,ou=groups,ou=swengg,dc=min,dc=io" ]; then

|

||||

echo "Failed to verify groups: $groups"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

users=$(echo "$output" | jq -r '.result.policyMappings[] | select(.policy == "readwrite") | .users[]')

|

||||

if [ "$users" != "uid=dillon,ou=people,ou=swengg,dc=min,dc=io" ]; then

|

||||

echo "Failed to verify users: $users"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

mc admin service stop new-minio

|

||||

}

|

||||

|

||||

main() {

|

||||

create_iam_content_in_old_minio

|

||||

|

||||

import_iam_content_in_new_minio

|

||||

|

||||

verify_iam_content_in_new_minio

|

||||

}

|

||||

|

||||

(__init__ "$@" && main "$@")

|

||||

|

|

@ -55,6 +55,11 @@ __init__() {

|

|||

|

||||

go install github.com/minio/mc@latest

|

||||

|

||||

## this is needed because github actions don't have

|

||||

## docker-compose on all runners

|

||||

go install github.com/docker/compose/v2/cmd@latest

|

||||

mv -v /tmp/gopath/bin/cmd /tmp/gopath/bin/docker-compose

|

||||

|

||||

TAG=minio/minio:dev make docker

|

||||

|

||||

MINIO_VERSION=RELEASE.2019-12-19T22-52-26Z docker-compose \

|

||||

|

|

|

|||

|

|

@ -45,7 +45,8 @@ function verify_rewrite() {

|

|||

"${MINIO_OLD[@]}" --address ":$start_port" "${WORK_DIR}/xl{1...16}" >"${WORK_DIR}/server1.log" 2>&1 &

|

||||

pid=$!

|

||||

disown $pid

|

||||

sleep 10

|

||||

|

||||

"${WORK_DIR}/mc" ready minio/

|

||||

|

||||

if ! ps -p ${pid} 1>&2 >/dev/null; then

|

||||

echo "server1 log:"

|

||||

|

|

@ -77,7 +78,8 @@ function verify_rewrite() {

|

|||

"${MINIO[@]}" --address ":$start_port" "${WORK_DIR}/xl{1...16}" >"${WORK_DIR}/server1.log" 2>&1 &

|

||||

pid=$!

|

||||

disown $pid

|

||||

sleep 10

|

||||

|

||||

"${WORK_DIR}/mc" ready minio/

|

||||

|

||||

if ! ps -p ${pid} 1>&2 >/dev/null; then

|

||||

echo "server1 log:"

|

||||

|

|

@ -87,17 +89,12 @@ function verify_rewrite() {

|

|||

exit 1

|

||||

fi

|

||||

|

||||

(

|

||||

cd ./docs/debugging/s3-check-md5

|

||||

go install -v

|

||||

)

|

||||

|

||||

if ! s3-check-md5 \

|

||||

if ! ./s3-check-md5 \

|

||||

-debug \

|

||||

-versions \

|

||||

-access-key minio \

|

||||

-secret-key minio123 \

|

||||

-endpoint http://127.0.0.1:${start_port}/ 2>&1 | grep INTACT; then

|

||||

-endpoint "http://127.0.0.1:${start_port}/" 2>&1 | grep INTACT; then

|

||||

echo "server1 log:"

|

||||

cat "${WORK_DIR}/server1.log"

|

||||

echo "FAILED"

|

||||

|

|

@ -117,7 +114,7 @@ function verify_rewrite() {

|

|||

go run ./buildscripts/heal-manual.go "127.0.0.1:${start_port}" "minio" "minio123"

|

||||

sleep 1

|

||||

|

||||

if ! s3-check-md5 \

|

||||

if ! ./s3-check-md5 \

|

||||

-debug \

|

||||

-versions \

|

||||

-access-key minio \

|

||||

|

|

|

|||

|

|

@ -15,13 +15,14 @@ WORK_DIR="$PWD/.verify-$RANDOM"

|